Whatever happens always happens on time.

Zen quote about software delivery

This article continues the discussion of the NFRs of being native to the cloud.

The scale of things to come

The set of complexities that you have to manage in your system, from its inception to the end of days, is immense. It represents a summary of its architecture, the way it is built, a structure that creates and delivers it, and the structure that is being serviced by it.

I want to focus fully on non-functional requirements in this article, so the “the way it is built” part will be avoided. We are currently not interested in the software itself, just the surrounding stuff - the footprint of its life.

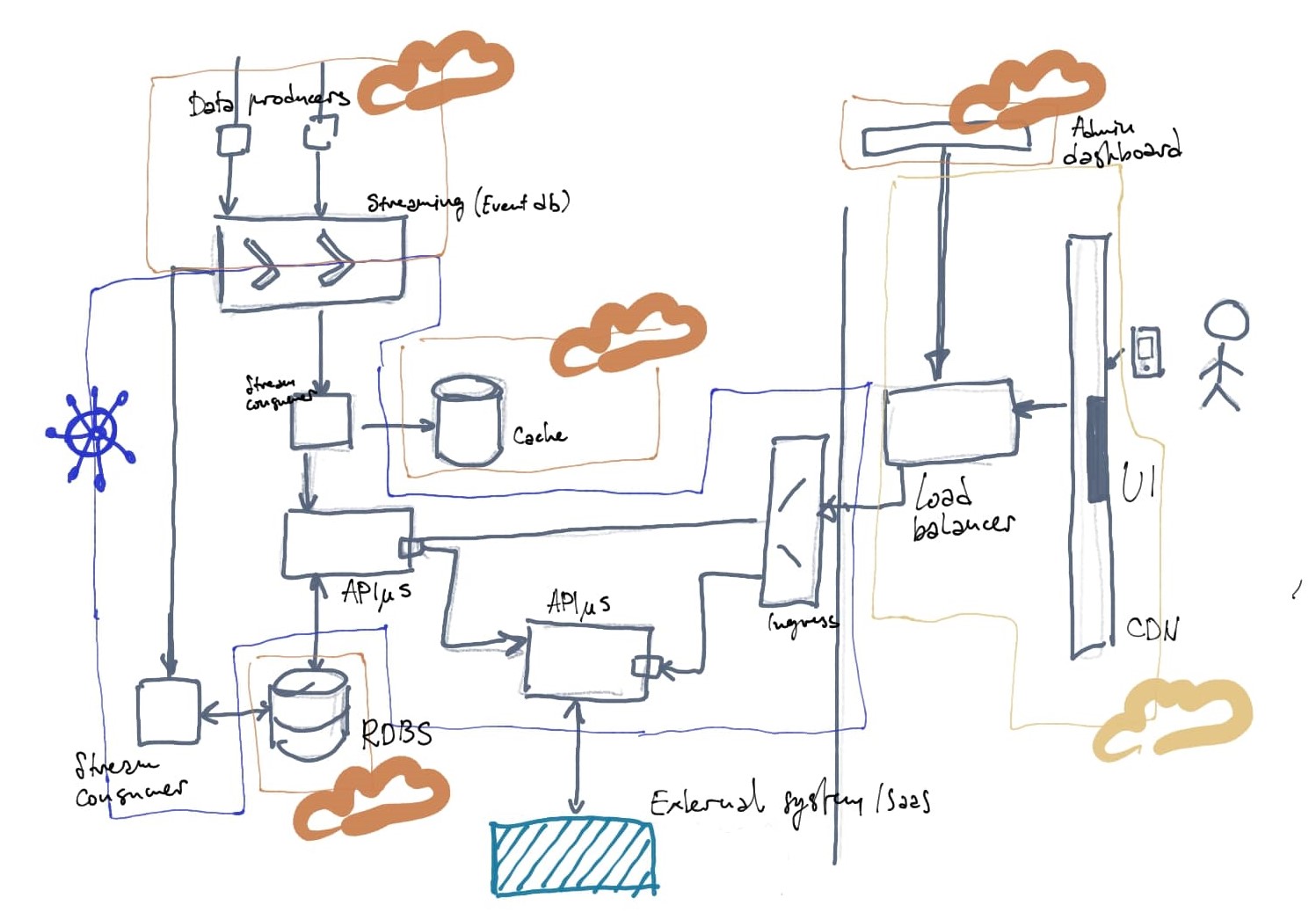

The architecture of the system defines its end-to-end structure, the layout of components, and how they relate to each other. It defines events and data flows and leaves a footprint in the infrastructure. This is the part that gets deployed to Kubernetes and other connected systems.

In the picture - a microservice architecture with event-driven components and two UIs, which would be considered a medium-complexity project at the start. The central piece is handled by Kubernetes, while a few components are mounted from cloud solutions and a CDN to handle static content (UI) and caching.

I intend to analyze most of the complexities of setting up and running the above and similar systems through a series of articles I am writing on the topic of non-functional requirements (NFR). I want to give more concrete approaches and more clarity on the implications of every approach and decision in this space. To make things easier, I will probably make some choices, like the choice to do everything in the Kubernetes space.

Fluidity

Further on, I would like you to understand that your system will, in most cases, have to handle the fluid nature of software architecture: you have to be ready for ever-changing software design (emergent design). And there we get to the actual problem space.

Emergent design - the idea is that the design of the system emerges (and changes) little by little, in small increments - in the same way that, I hope, your development process is organized.

The effects of emergent design manifest in all aspects of your software’s development, delivery, and lifecycle. This is a continuously changing NFR problem space, which consequently requires you to continuously adapt your solution. The actual problem we are solving is a twofold complexity that you are bound to manage till the end of days. These two parts of the problem are best analyzed and tackled together, through one thought process.

Over the many years I have spent working on all aspects of software, I ended up calling this “the two scales of devops”. The scales are, of course, complexity and volume measurements of particular software aspects:

- The development and delivery - the scale of development.

- The production part - the scale of life.

This problem of two scales, as I would usually call it, has to be solved day in and day out, which means that, in our case, it becomes a paradigm - we want to discuss and collect some established patterns for solving its usual components.

The first scale

The scale of development is about providing a good development experience at any pace, about development tooling, about flow efficiency, security, and ease of onboarding. You measure the quality of the solution in this area through build times, lead times to production, testing capabilities, release confidence, employee satisfaction (eNPS?), security, and process transparency metrics.

Flow efficiency - every workflow is composed of periods of focused problem-solving work and everything else (less important work and wait periods). The efficiency of the flow is the coefficient that shows what percentage of all the time you spend in the flow is actual problem-solving work.

On this side of the equation, the structure and method of delivery are the central point of the solution. The focus is mostly not on tools (apart from Kubernetes and a few chosen specifics needed for this analysis to even be possible), but on how to use the set of tools you chose to best manage any development process.
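As a rough illustration of the definition above, here is a minimal Python sketch that computes flow efficiency for a single work item. The `Stage` type, the stage names, and the hours are made up for the example; in practice these numbers would come from whatever ticketing or delivery tooling you use.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One period in the life of a work item (hypothetical structure)."""
    name: str
    hours: float
    active: bool  # True when this was focused problem-solving work


def flow_efficiency(stages: list[Stage]) -> float:
    """Share of total elapsed time that was actual problem-solving work."""
    total = sum(s.hours for s in stages)
    if total == 0:
        return 0.0  # nothing recorded yet, so nothing to measure
    active = sum(s.hours for s in stages if s.active)
    return active / total


# Illustrative data only: 8 active hours out of 50 elapsed hours.
ticket = [
    Stage("coding", 6.0, True),
    Stage("waiting for review", 18.0, False),
    Stage("review", 1.0, True),
    Stage("waiting for release window", 24.0, False),
    Stage("deploy and verify", 1.0, True),
]

print(f"{flow_efficiency(ticket):.0%}")  # prints 16%
```

Even in this toy case most of the elapsed time is waiting, which is usually where the cheapest improvements are hiding.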

One of the key points of any analysis like this is leaving the solution open-ended, so that the development team can accommodate new use cases.

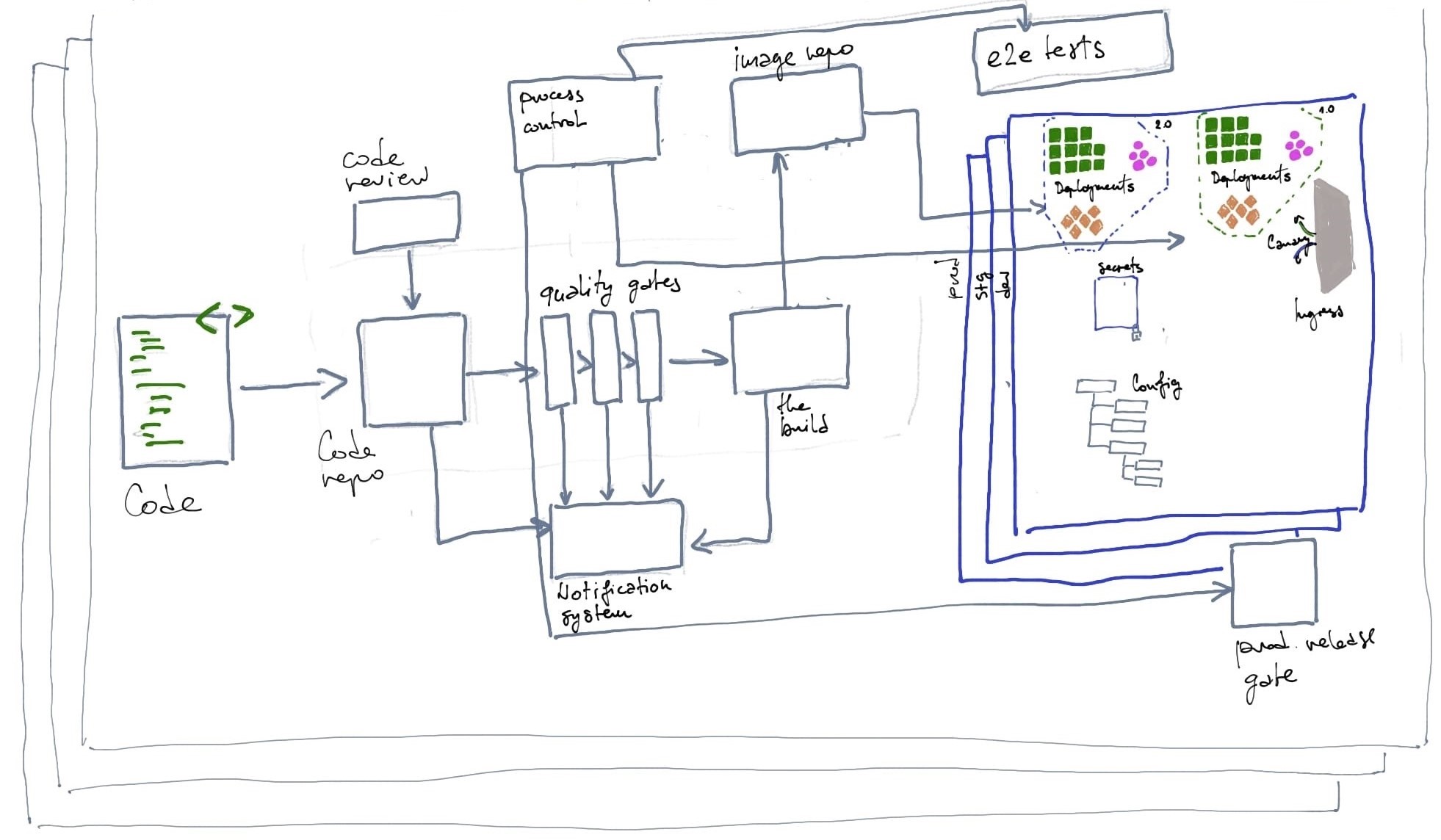

In the picture - Developing and delivering a system with certainty and high quality is quite a complex set of tasks and problems on its own.


The key is creating fast and resilient tooling that delivers your application smoothly and leaves enough flexibility to set up a good process around it. It is about technically enabling your engineering team and setting the whole team up for success.

KPIs - ultimately, our solutions on this side would be measured by three main metrics:

- Lead time for a change - mostly relying on flow efficiency (fewer approvals needed, fewer process bottlenecks of other kinds) and technical characteristics of the build process (faster builds, faster quality checks…)

- Change failure rate - relying on QA and SRE, mostly backed by Defect Detection Percentage (DDP), which aims to catch as many quality issues as possible before code hits production, and by MttR (mean time to resolve), which depends on noticing problems in prod early and solving them with automatic or one-touch recovery tools.

- Deployment frequency - relying on the quality and flexibility of the process and technical solutions, with a focus on making changes smaller and more frequent.

A good further read on this topic is Accelerate, the book that explains the approach behind building the annual State of DevOps report.
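To make the three metrics above a bit more tangible, here is a minimal Python sketch that derives them from a list of deployment records. The `Deployment` record, its field names, and the sample data are assumptions for illustration, and using the median for lead time is just one reasonable choice; your CI/CD system will expose this data in its own shape.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime  # when the change was committed
    deployed_at: datetime   # when it reached production
    failed: bool            # True if it caused a failure in production


def lead_time_for_change(deploys: list[Deployment]) -> timedelta:
    """Median time from commit to production (expects a non-empty list)."""
    seconds = [(d.deployed_at - d.committed_at).total_seconds() for d in deploys]
    return timedelta(seconds=median(seconds))


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Fraction of deployments that failed in production."""
    return sum(d.failed for d in deploys) / len(deploys)


def deployment_frequency(deploys: list[Deployment], window_days: int) -> float:
    """Average number of deployments per day over the observed window."""
    return len(deploys) / window_days


# Illustrative data only: four deployments within one week.
deploys = [
    Deployment(datetime(2024, 3, 4, 9), datetime(2024, 3, 4, 11), False),
    Deployment(datetime(2024, 3, 5, 10), datetime(2024, 3, 5, 16), True),
    Deployment(datetime(2024, 3, 6, 9), datetime(2024, 3, 6, 13), False),
    Deployment(datetime(2024, 3, 7, 9), datetime(2024, 3, 8, 9), False),
]

print(lead_time_for_change(deploys))              # prints 5:00:00
print(change_failure_rate(deploys))               # prints 0.25
print(round(deployment_frequency(deploys, 7), 2)) # prints 0.57
```

The numbers matter less than the trend: smaller, more frequent changes should pull lead time and failure rate down together.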

The second scale

This side of your system takes the software over after it is delivered to production. It is the area where the long-term stability of the app is defined, and it is about the long-term perspective of running it in production.

The scale of life is focused on operation, stability (resilience), security, and capacity planning. You measure the quality in this area through SLOs, API contract tests, quality of service, and incident response metrics (MttX).

This scale is about serving the end-user with a high-quality product. It is about the business value we are delivering and how to serve it in the best way.

Deeper down it is all about operational tooling, telemetry, good capacity planning, and other more specific SRE approaches.

The DevOps and SRE

KPIs and signals - KPIs (key performance indicators) of your service can be observed through two lenses:

- Operational - how well capabilities and people work together, measured through mean time to detect, diagnose, and resolve a problem (MttX, where X can be D for detect, R for resolve, and many more, based on what you want to measure). Signals to measure here are partly communicational - how automated the system is and how close the involved teams are - and partly fall into the following category.

- Application - quality at the service level, measured through service level objectives (how we want it to perform), indicators (which system metrics we use to determine this performance), and agreements (the contract we make with our clients about how our app should perform), together known as SLX. The signals we observe and combine in this case are also known as the four golden signals - error rate, latency, traffic, and saturation.
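Both lenses can be sketched in a few lines of Python. The `Incident` record, the function names, and all numbers below are assumptions for illustration. Note that teams define the MttX clocks differently; here both are measured from the moment the problem started. The second half checks an availability indicator against an objective and reports how much of the error budget is left.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    """Hypothetical incident record."""
    started_at: datetime   # when the problem actually began
    detected_at: datetime  # when someone (or something) noticed it
    resolved_at: datetime  # when service was back to normal


def mean_delta(deltas: list[timedelta]) -> timedelta:
    """Arithmetic mean of a non-empty list of durations."""
    return sum(deltas, timedelta()) / len(deltas)


def mttd(incidents: list[Incident]) -> timedelta:
    """Mean time to detect, measured from the start of the problem."""
    return mean_delta([i.detected_at - i.started_at for i in incidents])


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time to resolve, measured here from the start of the problem."""
    return mean_delta([i.resolved_at - i.started_at for i in incidents])


def availability_sli(total_requests: int, failed_requests: int) -> float:
    """Indicator: fraction of requests that were served successfully."""
    return (total_requests - failed_requests) / total_requests


def error_budget_remaining(slo: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the allowed failures (1 - SLO) not yet used up."""
    allowed_failures = (1 - slo) * total_requests
    return 1 - failed_requests / allowed_failures


# Illustrative data only.
incidents = [
    Incident(datetime(2024, 3, 4, 10, 0), datetime(2024, 3, 4, 10, 5), datetime(2024, 3, 4, 10, 45)),
    Incident(datetime(2024, 3, 9, 14, 0), datetime(2024, 3, 9, 14, 15), datetime(2024, 3, 9, 15, 15)),
]

print(mttd(incidents))  # prints 0:10:00
print(mttr(incidents))  # prints 1:00:00
print(f"{availability_sli(1_000_000, 400):.4%}")
print(f"{error_budget_remaining(0.999, 1_000_000, 400):.0%}")  # prints 60%
```

With a 99.9% objective and a million requests, 1,000 failures are allowed, so 400 failed requests leave roughly 60% of the budget for the rest of the period.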

To handle the problem space we just defined efficiently, the team has to be able and empowered to fully own everything they are building. The team should also own the process, and sometimes the team itself. Depending on the size of the scope, the team, and the company, it might not be one team owning the whole thing end to end. It might be a couple of teams working together in a certain, hopefully efficient, collaboration.

This approach of high ownership is better known as DevOps, the concept aiming to blur the boundaries between software development, delivery, and operation, bringing them closer together, with the goal of making software development and delivery a lot more efficient and of higher quality.

SRE - site reliability engineering is a set of software engineering practices focused on infrastructure reliability and operations. It was born from the direct application of DevOps methodologies to live systems, with a heavy focus on automation and observability. SRE-focused teams usually split their time roughly 50-50 between software engineering (building tools, features, or automation) and operations/support tasks (like incident handling, on-call, and so on).

A good further read on this topic, and on many other topics that I write about, is the SRE book.

To tame the scales

The goal of delivering high-quality software as soon as possible represents both scales. It puts the focus on smooth delivery of your software and on making sure it is reliable (high quality). Taking DevOps concepts and applying them more closely to live systems is the domain of site reliability engineering (SRE).

Systems that are native to the cloud are able to adopt DevOps approaches faster than others through building tools and capabilities (like on-demand infrastructure for engineers, fast and safe releases, security-first design…).

If built correctly, these systems often have the right tool for the given task, deliver continuously with low to no risk, and are generally more open-ended architecture wise, fully manifesting DevOps culture.

Looking from the practical standpoint, and especially in Cloud Native systems, DevOps and SRE practically mean the same thing. SRE pays less attention to how software is being delivered (first scale) and focuses heavily on applying the DevOps methodologies on live systems (second scale).

It all ultimately means that your team is able to pick up the software at its inception, develop it, efficiently deliver it to production, and afterward operate it at a high level of reliability while continuing to deliver new business value at a steady pace throughout the whole lifecycle of the system.

Keeping the system available and QoS high in many cases brings more business value than a new feature you would release. If you can’t have both, stick to quality.

DevOps and SRE on Kubernetes

Kubernetes’ architecture and the area of technical solution it covers with its core functionality is already a big incentive to plan for a full DevOps approach - it would actually be harder to not do DevOps. This also goes for a big part of the Cloud space, which makes Cloud-Native concepts a big DevOps driver.

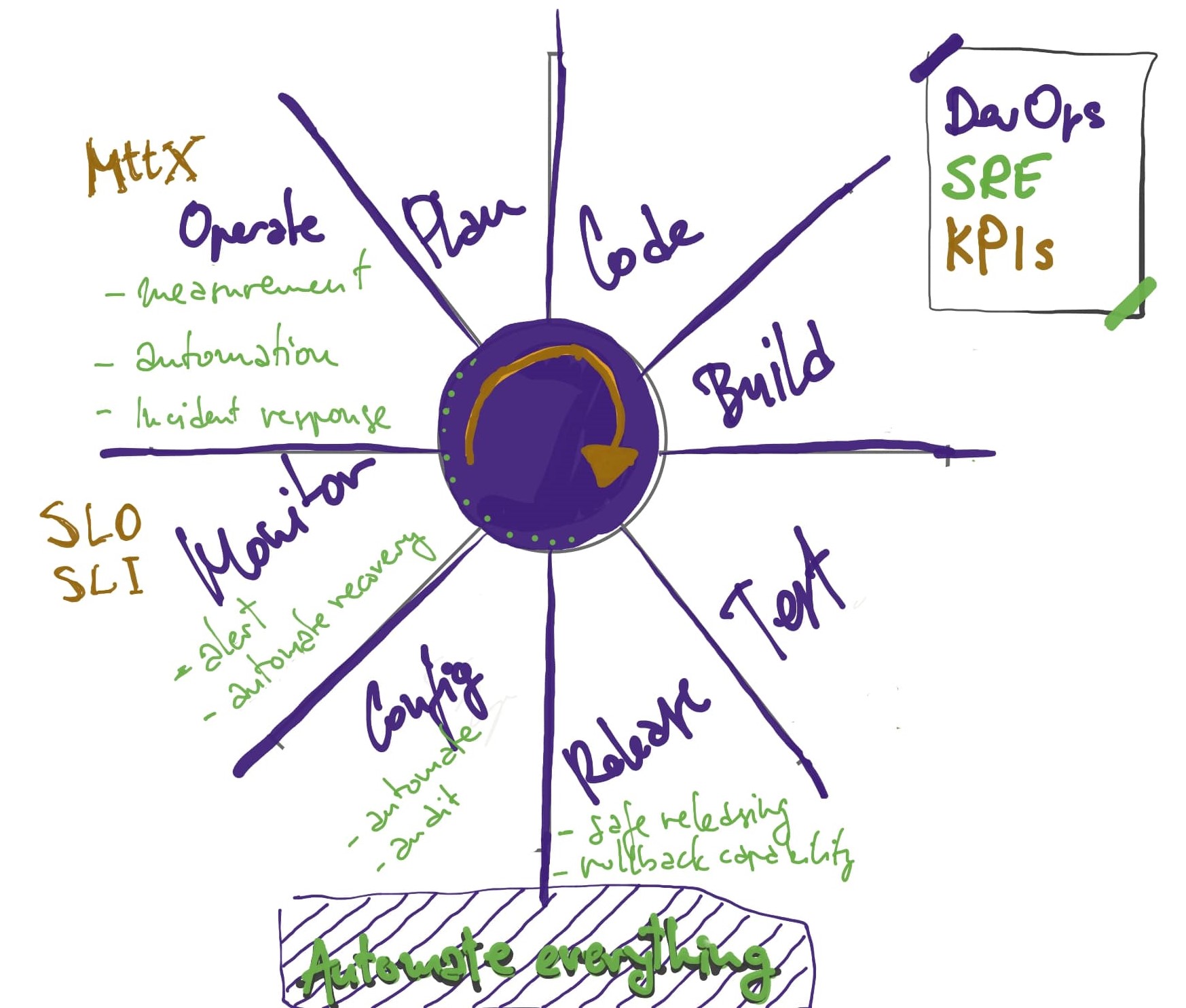

In the picture: SRE uses DevOps to dive deeper into the live system’s reliability and observability


Having a broad spectrum of tools that give us solution components for most of the problems we are facing is sort of a blessing, but it really puts a lot on your development team. Owning the code, building, deploying, and operating is good for the quality and speed of delivery of any software, but building all the tools and capabilities needed to be successful at that might be too hard for your team.

Cognitive load - in general, cognitive load represents the amount of intellectual effort you have to invest in a certain topic in order to perform a task, learn how to perform a task, or form long-term knowledge about it. Quite often it is all of these, in some short sequence or in parallel. The level of cognitive load is in direct correlation with (oh, what a surprise) intellectual performance. Take care of the cognitive load of your team.

A fun and quite high-quality read on the topic (and many more) - Team Topologies.

About technical enablement

We have to acknowledge that, although we can easily agree that tooling was always a factor in failing to do the “full DevOps,” it is cognitive load that plays a big part in it, if not even a major part. It turns out that, even if it all fits together pretty well, and it is a practically proven technique to fix the velocity of your team, the team can’t always keep pace with developing new features on your software and solutions around all tooling and non-functional requirements.

Integrating all parts of the software lifecycle into one seamless flow of delivery that radiates feedback and assures smooth operation is not magic. It is really hard work.

Tradeoffs are too often really costly in the long run. That is why you have to identify the point during your growth when it becomes crucial to have a team (or a person) dedicated to enabling development teams to do full DevOps.

The ultimate result might look like one of those amazing yoga flows: you do not understand how someone gets there, but you can see it is totally realistic to be able to do it. To have this kind of ownership over your system, all the things you had to do before, in separate knowledge silos, you have to do now as well - in a team of T-shaped expert engineers.

For this, we need to identify and provide the tools and capabilities that the team or teams need to do this kind of work. We have to understand what is coming and prepare accordingly, but since we actually do not want to know, and we want well-tamed chaos (weird way to spell a-g-i-l-e) to rule our lives, we need solid preparation. It would, actually, be amazing if we could get some kind of solution management capability, a project foundation that can contain chaos. How lovely.

I will start scratching under the surface of chaos in the next article.

For now, remember - this is not a finite game, it is a practice, and we are in it for the journey.

<3 DevOps.
