Building a scalable dynamic configuration control and circuit breaker system for microservices deployed to Kubernetes.

Capability to scale - inability to control

Cloud-native comes with high scale, portability, standardization, and modularity. Because of that, it is often treated almost like a silver bullet, an assumption that proves wrong more often than you would think.

Even something with a cloud-native pedigree as strong as a microservice deployed to Kubernetes comes with a tradeoff.

Statelessness

One of the core characteristics of cloud-native microservices is statelessness. It is the architectural concept that allows them to scale horizontally to practically any level: if a service holds no dynamic internal state, traffic can be load balanced to any instance, even globally between datacenters. This makes horizontal scaling really easy, and it is what made container orchestration at this scale possible.

The tradeoff

It also makes us jump through hoops if we want to manage the state of our service dynamically. That state is minimal and, like the service itself, distributed across a multitude of small, encapsulated, identical instances: statelessness in service of immutability. Add that the microservice is deployed to more than one datacenter globally, and we have the full scale of the problem.

Use Cases

There are two core use cases for dynamic state management in container orchestration (Kubernetes):

- Dynamic configuration management

- Circuit breaker

General config control

Controlling the configuration at the application level, or at some other structural level such as sub-application, market, Kubernetes cluster (as in the environment), or Kubernetes namespace, is the core use case here. It covers things like:

- Feature flags

- Integration point configuration (URLs, API keys, etc.)

- Encryption keys

- etc.

A full spec would probably include a dashboard that allows overview and control of the system configuration. Ideally, the configuration format should also be flexible to a certain extent.

Circuit breaker

A tool that flips a flag to switch something in your system on or off based on external factors is called a circuit breaker. The external factor is usually a high error rate or some other kind of service quality deterioration. Such flags can also drive other kinds of functional regulation in the system.

The circuit breaker is a form of configuration control, in the sense that you are controlling a flag. It is obviously simpler in terms of config format.

On the other hand, control has to be programmatic: the interface should accept some kind of trigger, most commonly a system alert (for example, a Grafana or Prometheus alert triggering a webhook).

The problem

Our system is a microservice deployed to Kubernetes in multiple clusters, which makes it:

- Containerized

- Stateless - deployed with a certain state that is not changed by the requests passing through it

- Disposable - can live for 2 seconds or 2 days (rarely even longer) and be replaced (or not) by a new one, usually identical

- Highly distributed

Part 1 - distribution of state

Our service is not a box that connects to the database and then just pulls the configuration. It is a multitude of smaller boxes, potentially running in different Kubernetes clusters or different Kubernetes namespaces, that all need to be in the same state at all times.

Having pods in different states would mean that the app behaves differently depending on where the request lands. Ultimately, that means an uneven experience for consumers.

Session stickiness, or keeping any additional state at the ingress level, is a bad and rare practice. And, realistically, we want changes to propagate in near real time instead of waiting for the next database read cycle.

So, in summary: we need highly available, low-latency, scalable solution(s) that allow us to push state changes to all of our pods globally.

Part 2 - control of the state

Based on the use cases, the state needs to be controllable both manually and programmatically.

This implies both an API and a UI that allow centralized control of system configuration at scale.

Building the solution - case study

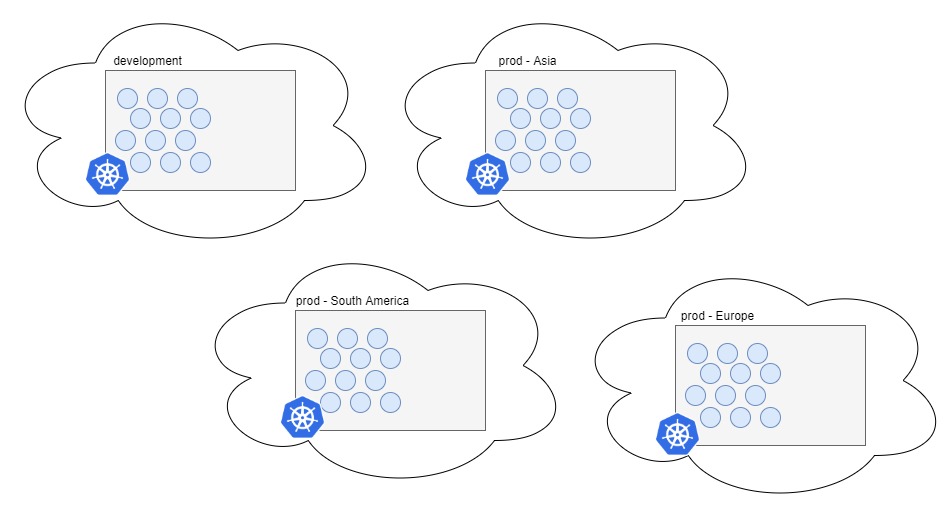

This is our microservice, deployed to 4 cloud-based Kubernetes clusters across the world.

All the pods are identical: they are deployed from the same container image, hosted in a container registry somewhere in the world (most probably a distributed registry that lets it be pulled from the closest location).

Each cluster identifies itself to the pods through an environment variable, which is then used to switch between deployed config sets (integrated service endpoints, API keys, and other cluster-specific settings).
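A minimal sketch of that switch, assuming a hypothetical `CLUSTER_NAME` variable set in each cluster's Deployment manifest, plus made-up cluster names and endpoints. Because the variable is read once at process start, the selected set stays fixed for the pod's lifetime, which is exactly the limitation the next clarification describes.

```python
import os

# Hypothetical per-cluster config sets baked into the image at build time.
CONFIG_SETS = {
    "eu-west": {"payments_url": "https://payments.eu.example.com", "region": "eu"},
    "us-east": {"payments_url": "https://payments.us.example.com", "region": "us"},
}

# Fallback used when the variable is missing or names an unknown cluster.
DEFAULT_CLUSTER = "eu-west"


def select_config(env=None):
    """Pick the config set for the cluster this pod runs in.

    CLUSTER_NAME is a hypothetical variable name; env defaults to os.environ.
    """
    env = os.environ if env is None else env
    cluster = env.get("CLUSTER_NAME", DEFAULT_CLUSTER)
    return CONFIG_SETS.get(cluster, CONFIG_SETS[DEFAULT_CLUSTER])
```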

**Clarification 1:** We cannot solve this problem with env vars: changing an env var requires restarting the pod. This is the reality of Kubernetes at the moment.

We are looking for a way to distribute the state of the pods live, at the level of a higher structural entity in K8s.

So, as the first part of our solution, we want to be able to change something in the namespace and have it reflected in all the pods of the same type deployed to that namespace. Then we need this to scale across clusters.

Changing the state

Looking at the problem, we see that inside the cluster the solution would be some kind of storage that we can observe for changes, receiving the change itself as part of the change event.

Now that we know the direction we want to go, I will skip the research step for the sake of this article's length and jump straight to what I use.

The Key-Value store

Since I am a big fan and user of many HashiCorp products, Consul is already in my architecture repository. This time we are using it for its KV store.

Consul is a cloud- and Kubernetes-native, scalable, multipurpose solution that, among other things, has a key-value store that lets you manage a flexible set of values and watch them for changes. Dynamic configuration and feature flagging are listed among the primary use cases for the Consul KV store on its website.

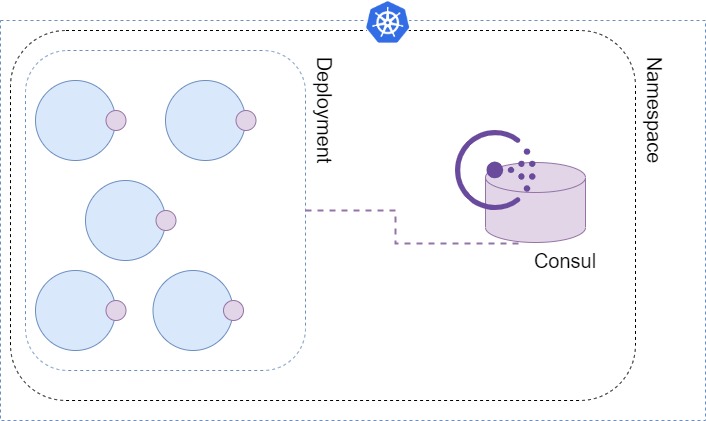

So, the plan is to deploy Consul in our namespace and have the pods read values from it directly.

It is generally easy to connect to Consul from your microservice and watch for changes. Most of the major programming languages used for backend development have libraries for this.

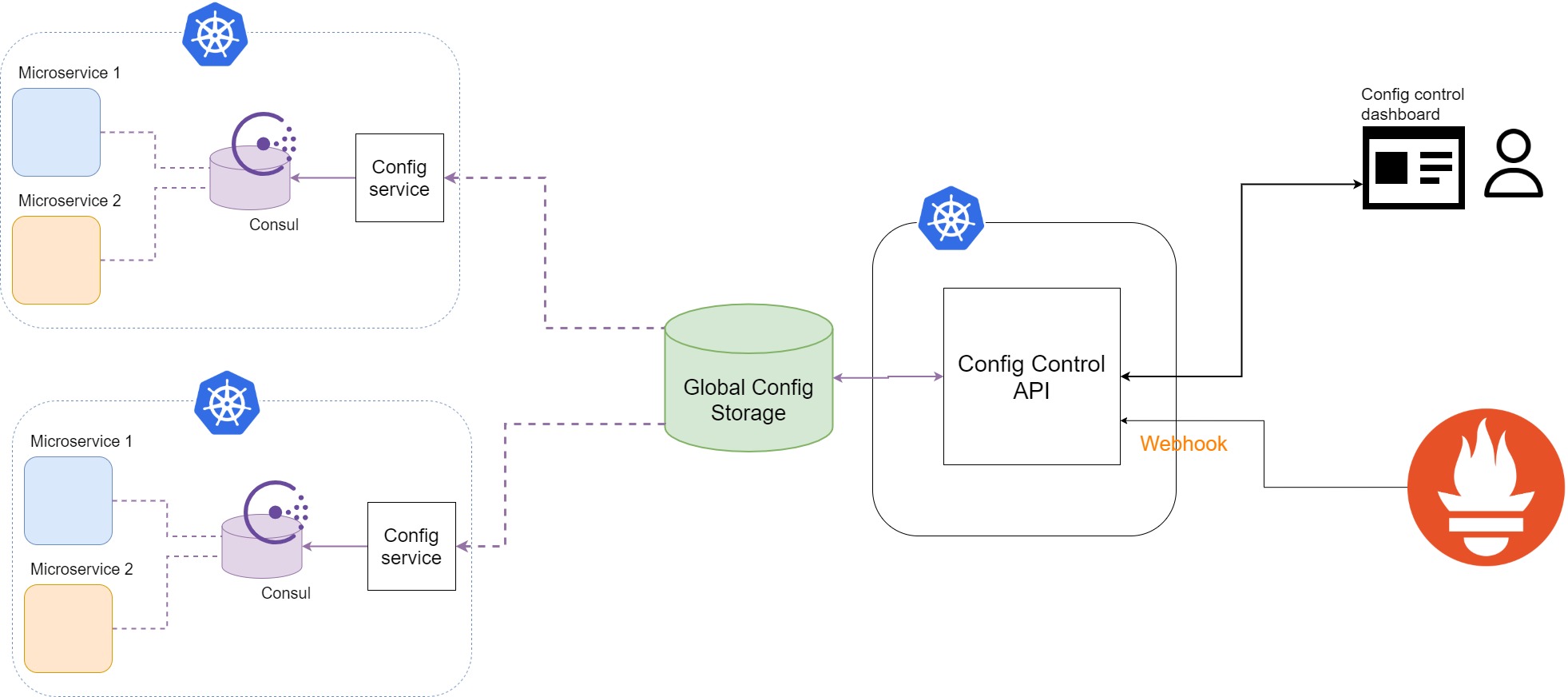

In the picture, Consul is deployed inside our k8s namespace(s), and the pods in our deployment connect to it from the microservice code.
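To make "watch for changes" concrete, here is a minimal sketch that uses only the Python standard library against Consul's HTTP KV endpoint and its blocking queries (the `index` and `wait` query parameters and the `X-Consul-Index` response header). The Consul address and key name are assumptions, and in practice you would probably use an existing client library for your language. The `fetch` parameter exists only so that the loop can be exercised without a running Consul.

```python
import base64
import json
import urllib.error
import urllib.request

# Assumed address of the Consul service inside the namespace.
CONSUL_ADDR = "http://consul:8500"


def http_fetch(key, index, wait="5m"):
    """Blocking read of one KV key; returns (X-Consul-Index, value bytes or None).

    Consul holds the request open until the key changes past `index`
    or `wait` elapses (it adds a little jitter, hence the larger timeout).
    """
    url = f"{CONSUL_ADDR}/v1/kv/{key}?index={index}&wait={wait}"
    try:
        with urllib.request.urlopen(url, timeout=330) as resp:
            new_index = int(resp.headers["X-Consul-Index"])
            entry = json.loads(resp.read())[0]
            value = entry["Value"]  # base64-encoded, or null for an empty value
            return new_index, None if value is None else base64.b64decode(value)
    except urllib.error.HTTPError as err:
        if err.code == 404:  # key does not exist (yet); the service keeps its defaults
            return int(err.headers.get("X-Consul-Index", 0)), None
        raise


def watch_key(key, on_change, fetch=http_fetch, max_rounds=None):
    """Call on_change(value) each time the key's value differs from the last one seen."""
    index, last, rounds = 0, None, 0
    while max_rounds is None or rounds < max_rounds:
        rounds += 1
        new_index, value = fetch(key, index)
        if new_index < index:
            # Consul's guidance: if the index goes backwards, reset it and re-read.
            index = 0
            continue
        index = new_index
        if value != last:
            last = value
            on_change(value)
```

A production loop would also catch connection errors and back off before retrying, rather than letting them propagate.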

Now, things left for us to solve are:

- How do we add and change things in Consul?

- How do we distribute changes globally?

- How can we make changes to config programmatically (remember the circuit breaker case)?

Clarification 2: Consul does have a web interface and an HTTP API that we can use, but they are a foundation rather than a solution for our case. The web interface can change the configuration in one k8s namespace in one k8s cluster, and the HTTP API allows the same thing programmatically.

Dynamic (re)configuration

Planning how to control the content stored in Consul KV requires considering one more aspect of the problem - the level of distribution.

We have multiple (in our case 4) clusters with k8s namespaces in which our microservice (and Consul) are deployed. We also want to plan for growth along this dimension (more k8s clusters, more k8s namespaces).

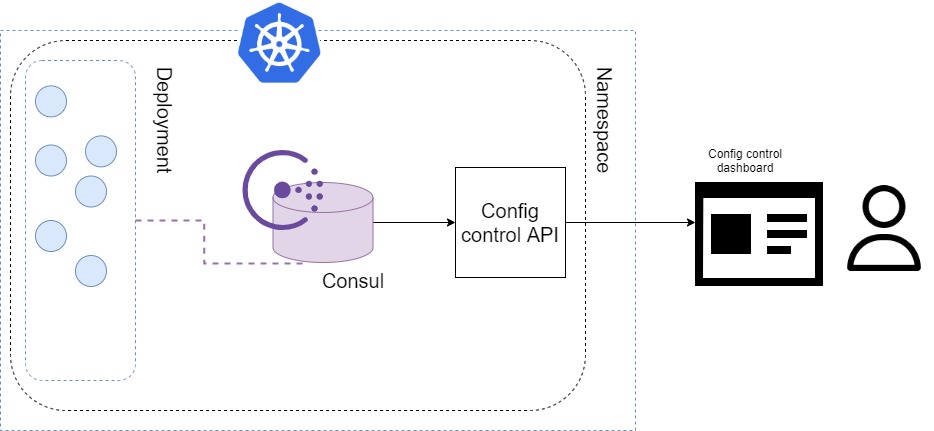

We solve this by having a central, single source of truth: a database that stores configurations. The idea is to use the Consul API, build a microservice with an API on top of it, and build a UI that uses this API.

Right?

Wrong! In this case, the API is redundant, since we could have just used the Consul UI. It is also not scalable: the only way to scale it is to deploy another instance for every cluster and make changes in each one manually, as you would anyway.

Final design - Scaling configuration

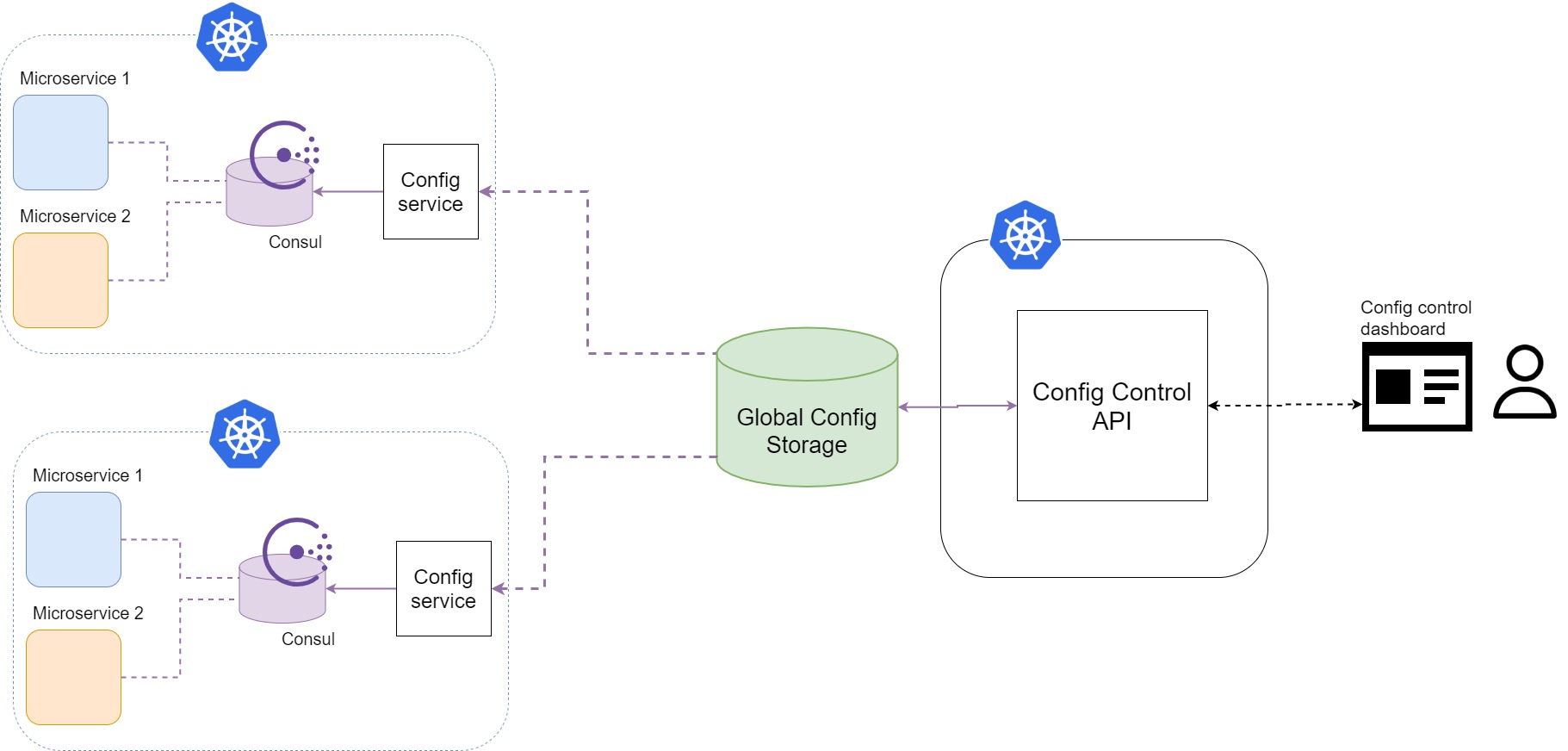

In order to scale configuration distribution, we need to extend the architecture a bit. We want our configuration control to be able to scale across multiple k8s clusters and multiple k8s namespaces.

Above you see the final design for the scalable dynamic configuration control system.

Single source of truth - UI + API + Storage

Here we introduce the UI-API-Storage system: the capability center for configuration management and the place from which all configuration is distributed to the clusters. This component provides the storage from which the Consul in any new namespace gets its configuration. It does not really matter where it is deployed; it will probably live in one of the existing clusters.

Clarification 2.1: Yes, theoretically, we could have chosen a single central storage that every pod around the world listens to; with the right access pattern, even an S3 bucket would do. This would, however, compromise the availability of the storage for newly created or restarted pods and, with that, the integrity of the system. A highly available, high-performance, in-cluster key-value store acting as a storage proxy ensures that configuration is always available and makes the system using it more resilient.

Additionally, the API gives us the flexibility to make programmatic changes. This will come in handy for the circuit breaker extension later on.

Do not worry about the geographical proximity of this component: we are making sure that latency does not make much difference, and we are building the system to handle networking issues gracefully.

UI - not much to say here: a set of forms and potentially various dashboards that use the API component to make configuration easier to manage.

API - carries most of the functionality: it accepts configuration CRUD operations and passes them on to the storage in the proper format. The API should also handle auth, since this system is security-critical.
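A minimal sketch of the API's CRUD core, assuming a hypothetical flat schema and whole-object writes keyed by a `cluster/namespace` scope string. Validation runs before anything is stored, so a malformed config never reaches the clusters; HTTP routing and auth are left out.

```python
import copy

# Hypothetical schema: allowed top-level keys and the types their values must have.
SCHEMA = {
    "feature_flags": dict,
    "payments_url": str,
    "request_timeout_ms": int,
}


class ValidationError(ValueError):
    pass


def validate(config):
    """Reject unknown keys and wrongly typed values before anything is stored."""
    unknown = set(config) - set(SCHEMA)
    if unknown:
        raise ValidationError(f"unknown keys: {sorted(unknown)}")
    for key, expected in SCHEMA.items():
        if key in config and not isinstance(config[key], expected):
            raise ValidationError(f"{key} must be {expected.__name__}")
    flags = config.get("feature_flags", {})
    if not all(isinstance(v, bool) for v in flags.values()):
        raise ValidationError("feature flag values must be booleans")


class ConfigService:
    """In-memory stand-in for the API's CRUD layer; every write replaces the whole object."""

    def __init__(self):
        self._store = {}  # scope -> (version, config)

    def put(self, scope, config):
        validate(config)
        version = self._store.get(scope, (0, None))[0] + 1
        self._store[scope] = (version, copy.deepcopy(config))
        return version

    def get(self, scope):
        version, config = self._store[scope]
        return version, copy.deepcopy(config)

    def delete(self, scope):
        self._store.pop(scope, None)
```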

Storage - it can be anything, really. We do not expect it to grow too big, and the data structures are not too complex (the most complex case would be feature flags), so there is no need to prescribe any particular storage. Wherever life takes you cloud-wise. One nice-to-have is the ability to subscribe to change events on objects (or something similar), which is super handy for this use case.
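The subscribe-on-write contract can be illustrated with a tiny in-memory stand-in. This is a hypothetical sketch, not any particular product's API; real backends expose change events through streams, notifications, or watches. Here, events fire only when a value actually changes and carry only the key, so subscribers have to re-read the full object, in line with the full-sync approach described below.

```python
from collections import defaultdict


class ObservableStore:
    """In-memory stand-in for central storage that emits change events on write."""

    def __init__(self):
        self._data = {}
        self._subscribers = defaultdict(list)

    def subscribe(self, key, callback):
        self._subscribers[key].append(callback)

    def put(self, key, value):
        changed = self._data.get(key) != value
        self._data[key] = value
        if changed:
            # The event carries only the key; consumers pull the full object themselves.
            for callback in self._subscribers[key]:
                callback(key)

    def get(self, key):
        return self._data.get(key)
```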

Config service - in cluster/namespace reconciliation

Depending on whether you opted for storage that broadcasts change events (and whether you want to use that functionality), this can be a microservice or a cron job.

The microservice would subscribe to events coming from the storage. Every time it receives a change event, it pulls the configuration object from the central storage and deploys it to Consul.
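A minimal sketch of that event handler, assuming a hypothetical `read_central(scope)` accessor for the central storage and a hypothetical `config/<scope>` key layout in Consul. It ignores whatever the event carries, pulls the full object, and writes it whole with Consul's `PUT /v1/kv/<key>` endpoint, which answers `true` or `false`.

```python
import json
import urllib.request

# Assumed address of the Consul service inside the namespace.
CONSUL_ADDR = "http://consul:8500"


def consul_put(key, payload):
    """Write the whole value to Consul KV; Consul replies with true or false."""
    req = urllib.request.Request(f"{CONSUL_ADDR}/v1/kv/{key}", data=payload, method="PUT")
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().strip() == b"true"


def reconcile(scope, read_central, write_consul=consul_put):
    """Handle a change event for `scope`: pull the full object and push it to Consul."""
    config = read_central(scope)  # hypothetical accessor for the central storage
    if config is None:
        return False  # nothing to deploy; Consul keeps its last value
    payload = json.dumps(config, sort_keys=True).encode()
    return write_consul(f"config/{scope}", payload)
```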

Clarification 3: In some cases, the change event contains an object that represents what changed, so theoretically you could use it to deploy just the config diff. However, this approach undermines the resilience of the system, because it assumes that every change event reaches every cluster, every time. Assume instead that nothing will reach everything all the time.

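The cron job would run at a short interval (seconds, or minutes in some cases) and simply pull the configuration object from storage and deploy it to Consul. Keep in mind that a Kubernetes CronJob schedule cannot go below one minute, so for intervals in seconds a long-running loop is the practical option.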
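A minimal sketch of the polling variant as a long-running loop, assuming hypothetical `read_central` and `write_consul` callables like those a config service would have. A failed round changes nothing in Consul; the next round simply tries again.

```python
import json
import time


def sync_forever(read_central, write_consul, scope, interval_s=10.0,
                 max_rounds=None, sleep=time.sleep):
    """Every interval, pull the full config for `scope` and write it to Consul.

    read_central and write_consul are hypothetical callables. Network errors
    (urllib's URLError is an OSError) skip the round and leave Consul untouched.
    """
    rounds = 0
    while max_rounds is None or rounds < max_rounds:
        rounds += 1
        try:
            config = read_central(scope)
            if config is not None:
                write_consul(f"config/{scope}", json.dumps(config, sort_keys=True).encode())
        except OSError as err:
            print(f"sync round skipped: {err}")
        sleep(interval_s)
```

Writing the full object every round, even when it has not changed, is deliberate: if a Consul instance loses its data, the next round restores it without anyone noticing.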

The resilience of the config management

The first precondition: nothing should start out empty; everything should ship with defaults.

For the resilience considerations, let’s take a look from the perspective of one of the services we are configuring:

- The service has its own (default) configuration and updates it when it connects to Consul and every time it observes a change there. If it receives nothing from Consul, or cannot connect to it, it keeps its original (default) configuration.

- Consul holds either a default configuration or whatever the config service wrote to it last.

- The config service always pulls the full config from the central storage and stores it in Consul; if there is no connection, nothing happens.

- The central service should be built like everything else: with cloud-native best practices in mind and according to internal or reputable external architectural guidelines, for example the AWS Well-Architected Framework, the Google Cloud Architecture Framework, or similar.
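From the service's side, these rules boil down to three steps. Start from defaults, accept a new value only if it parses, and otherwise keep what you have. A minimal sketch, assuming JSON-encoded values and hypothetical keys:

```python
import json

# Defaults baked into the image: the service never starts with an empty config.
DEFAULTS = {"feature_flags": {"new_checkout": False}, "request_timeout_ms": 2000}


class RuntimeConfig:
    """Effective config: defaults, overlaid by the last valid value seen in Consul."""

    def __init__(self, defaults):
        self._defaults = defaults
        self.current = json.loads(json.dumps(defaults))  # deep copy of the defaults

    def apply(self, raw):
        """Apply a raw Consul value; keep the previous config if it is missing or corrupt."""
        if raw is None:
            return False
        try:
            incoming = json.loads(raw)
        except ValueError:  # JSONDecodeError and UnicodeDecodeError are both ValueErrors
            return False  # an outdated config beats a broken one
        if not isinstance(incoming, dict):
            return False
        # Top-level keys from Consul replace the corresponding defaults.
        merged = json.loads(json.dumps(self._defaults))
        merged.update(incoming)
        self.current = merged
        return True
```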

Clarification 4: No partial updates of the configuration. Configuration management means transferring a really small amount of data (if that changes, optimize accordingly), so I fear data corruption more than long download times.

The most important conclusion: configuration can be outdated, but we must make sure that we always have some configuration and that it is not corrupted.

Implementing the circuit breaker

Now that we have arrived at a robust and scalable config control solution that involves building some software ourselves, let's use that to our advantage and build a nice circuit breaker.

Use Case

We want to implement circuit breaker capability on top of the newly designed config control solution.

- It should be able to manage multiple binary (true/false) flags for circuit breaker control (check: this is already baked into the config management solution)

- It has to be able to trigger config changes programmatically (by receiving an alert)

Receiving an alert

The amazing thing about the few components we have built here is the flexibility they give us.

In the final design picture above, you can see that, architecturally, all we did was add one more API consumer.

I am using Prometheus as my monitoring and alerting tool of choice because I have used it in practice for this exact purpose, but most modern monitoring tools offer the same functionality.

The functionality you need is generic/custom webhooks. They let you develop logic behind an HTTP endpoint (not necessarily part of your REST API) that reacts to triggers from the Prometheus alerting backend (Alertmanager) and switches the config.
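Alertmanager posts a JSON body with an `alerts` array, in which each alert carries a `status` (`firing` or `resolved`) and its `labels`, including `alertname`. A minimal standard-library sketch of the receiving side follows, assuming a hypothetical mapping from alert names to flags. Forwarding the resulting changes to the config API is left as a placeholder.

```python
import json
from http.server import BaseHTTPRequestHandler

# Hypothetical mapping from Prometheus alert names to circuit breaker flags.
ALERT_TO_FLAG = {
    "PaymentsHighErrorRate": "payments_enabled",
    "RecommendationsSlow": "recommendations_enabled",
}


def flag_changes(body):
    """Turn an Alertmanager webhook body into (flags to open, flags whose alert resolved).

    Firing alerts open the breaker (flag -> False). Resolved alerts are only
    reported, because switching back may need a human to confirm recovery.
    """
    payload = json.loads(body)
    opened, resolved = {}, []
    for alert in payload.get("alerts", []):
        flag = ALERT_TO_FLAG.get(alert.get("labels", {}).get("alertname"))
        if flag is None:
            continue  # not a circuit breaker alert
        if alert.get("status") == "firing":
            opened[flag] = False
        elif alert.get("status") == "resolved":
            resolved.append(flag)
    return opened, resolved


class AlertHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        opened, resolved = flag_changes(self.rfile.read(length))
        # Placeholder: send `opened` to the config API as a flag update.
        print("open breakers:", opened, "resolved:", resolved)
        self.send_response(200)
        self.end_headers()

# To serve: http.server.HTTPServer(("", 8080), AlertHandler).serve_forever()
```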

On and off - a bit about implementation

When you receive an alert from the monitoring system (or Alertmanager), the system should change its state based on the parameters passed. The state change usually means turning something off to recover from an ongoing failure, switching to another integration, degrading the service to maintain performance, etc.

Restoring the system to its original state, however, is not that straightforward. In most cases, you can configure the monitoring system to send you a signal when the alert is resolved. With Prometheus, you can set `send_resolved` to `true` in the Alertmanager webhook config, and it will do exactly that. My article on monitoring microservice data flows covers, among other things, alerting thresholds.

This makes it easy to just switch back, but in most cases you will want a human to confirm that everything is indeed back to normal. The reality is that you had some kind of outage, and something caused it, maybe even you… There is a lot of work around detecting frequent circuit breaker triggers and reviewing service health.

It is an operationally complex matter and should be handled on a case-by-case basis.
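One way to encode "switch off automatically, switch back on only with a human" is a small state machine per flag. This is a sketch of a hypothetical design, not a standard one. A resolved alert only moves the breaker to `awaiting_confirmation`, and repeated trips within a time window are reported as flapping so that someone reviews the service.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Breaker:
    """One circuit breaker flag with a human-confirmed recovery path (hypothetical design)."""
    name: str
    state: str = "closed"  # closed -> open -> awaiting_confirmation -> closed
    trips: list = field(default_factory=list)  # timestamps of past trips

    def on_firing(self, now=None):
        now = time.time() if now is None else now
        if self.state != "open":  # repeated notifications for the same outage are not new trips
            self.trips.append(now)
        self.state = "open"

    def on_resolved(self):
        # The alert cleared, but a human still has to confirm the service is healthy.
        if self.state == "open":
            self.state = "awaiting_confirmation"

    def confirm(self):
        if self.state == "awaiting_confirmation":
            self.state = "closed"

    @property
    def flag(self):
        return self.state == "closed"  # the guarded feature is enabled only when fully closed

    def is_flapping(self, window_s=3600, max_trips=3, now=None):
        now = time.time() if now is None else now
        recent = [t for t in self.trips if now - t <= window_s]
        return len(recent) >= max_trips
```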

Security

With all the flexibility that comes with building your own enterprise-grade software solution comes a need for enterprise-grade security.

There is not much advice I can give here beyond the usual.

Auth - make sure you have API-level authentication and authorization on the whole thing. For the solution to scale, you want the flexibility of multiple roles/user types, so you should be able to segment authorization. The best way to do that is RBAC.
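A minimal sketch of segmented RBAC, assuming hypothetical role names, actions, and a `cluster/namespace` scope format. Each caller holds role bindings limited to a scope, and a request is allowed only if some binding grants the action on that scope. In production you would more likely delegate this to your identity provider or an existing policy engine.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"config:read"},
    "editor": {"config:read", "config:write"},
    # Service account for the alert receiver: may only toggle breaker flags.
    "circuit-breaker": {"config:read", "flags:toggle"},
    "admin": {"config:read", "config:write", "flags:toggle", "roles:manage"},
}


def in_scope(scope, granted):
    """'' grants every scope; otherwise match whole path segments ('eu-west' != 'eu-west-2')."""
    return granted == "" or scope == granted or scope.startswith(granted + "/")


def is_allowed(bindings, action, scope):
    """bindings: (role, granted_scope) pairs held by the caller, e.g. ("editor", "eu-west")."""
    return any(
        action in ROLE_PERMISSIONS.get(role, set()) and in_scope(scope, granted)
        for role, granted in bindings
    )
```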

Cloud security - take care of the cloud security of your app. I write about this, other people write about it better than me, and Google/Microsoft/AWS/IBM write about it for their own platforms. Not in this article, though.

Software-side security - do what you need to do for your app to be secure. Please?

The modularity of the solution - how to use it for your particular case

You can use this solution partly or in full; depending on your system's needs, pick the parts you need.

- If you just want to manually configure a Kubernetes deployment in one cluster, you can just use Consul. It is the core of cluster data management and it has its own UI.

- If you only want a circuit breaker, you need an alert receiver that talks to Consul and applies config-changing logic based on the received alert. You do need to build a microservice, but you do not need the storage and a centralized system with a UI. You can just have an alert receiver in every cluster and send alerts to multiple places if needed.

- If you want a mobile app to control this, it is really just a matter of building the app, since you already have the REST API. But please think twice… talk about a waste of time.

Summary

This is one of the ways to build a dynamic configuration control system and a circuit breaker for microservices deployed to Kubernetes.

Solutions based on this design, or on similar or older versions of it, have been running in production for a few years now. During this time, they have been tested on high-scale, business-critical systems.

I consider this approach one of the best examples of what handling the scale should always be about.

If you decide to use this solution or a part of it, I would like to hear about the results.

Thank you!
