In this article, I work through blueprinting the security aspects of cloud-native software solutions and give some general security recommendations. Security is the touchiest topic of all: often disliked by engineering teams (more because of the processes and the way enterprises handle it than for any really meaningful reason), counter-intuitive in places, and ever-haunting. Love it or hate it, if you are delivering any kind of software, it is part of your life.

This article is a continuation of my blueprinting series, which aims to provide broad insight into the NFRs (non-functional requirements) of cloud-native software solutions.

You will read about:

- How to approach software system security.

- Security influence on software architecture.

- Cloud security considerations and approaches.

- Kubernetes security considerations and approaches.

- The principle of least privilege.

About internet system security - the upside down

Getting into security discussions requires a certain mindset shift. The usual product-led discussion, asking your consumers about their wishes and needs, does not work for security; you need to take a completely different perspective.

The most important player in the game of security is the attacker. This persona is an unpredictable, unconstrained entity that will try LITERALLY ANYTHING and constantly change entry vectors and approaches. You have to believe that there is nothing an attacker would not try and would not remember to try; count on nothing but the worst.

Covering the security of web systems (publicly accessible ones) is hard and detailed work that requires lots of redundancy. Security practice should not aim for simplicity (unlike code, which should be DRY and simple); it should create as many problems as possible for any attacker.

So, based on that, let us review what we have, go through some architecture recommendations, and then move into the in-depth security concepts.

Architecture-based security recommendations

From a security perspective, on the architecture side, we are talking more about influencing the design than about applying something on top of it. The best way is to take a security-first approach and design everything with security in mind.

Looking at this particular design, there are just a few points to consider. Let's look at the diagram of the basic infrastructure footprint with the security consideration points marked.

As the picture shows, most of the security considerations are related to connections between different systems, or are implied by them.

The solution to a particular problem may differ depending on the hosting setup, but conceptually it is always the same.

While reviewing the architecture, we focus on the implications of the design and leave other security aspects, like access handling, for later.

Hosting setups: there are currently a few prominent approaches. Which one you end up with is sometimes driven by business compliance requirements, but more often by how old the unit you are working in (company, department) is, what its historical setups were, etc. Currently, you will usually land in one of the following:

- Everything on a single cloud solution/provider

- Everything on-premises (your own in-house data center or a rented one)

- Multiple cloud solutions (multi-cloud)

- Cloud - on-prem combination (hybrid cloud)

- All of the above :)

Malicious attacks

Malicious attacks can aim to:

- Steal data

- Exploit the database to learn more about your infrastructure and perform a more complicated attack

- Simply disrupt the service by degrading database performance

- Just about anything we can or cannot imagine

Datastores

The simplest connections to secure are those to databases. Depending on the database setup and hosting, there might be some variations, quirks, and benefits, but the concept is the same across this space.

There are two basic approaches:

- Connection limitation: the connection stays public but is limited to communication between the app and the database.

- Connection encapsulation: a virtual network is created to completely isolate the traffic between components.

I do not recommend ever using the first approach unless you have absolutely no choice. Your database stays publicly exposed to any kind of malicious attack, and even if access to your DB is well handled, malicious attempts are not limited to getting or exploiting the data.

The best solution is to use at least the second option, or better, a combination of the two: on top of a dedicated virtual (or physical) network, apply a network policy or access list that strictly controls which components and/or network addresses can reach the database.

In AWS, for example, you have many tools available, and a well-architected, secure setup would use route tables, network access control lists (network ACLs), and security groups to keep full control over what accesses which resource. GCP offers comparable control through VPC routes and firewall rules.
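As a rough illustration of the AWS side, the CloudFormation sketch below keeps a database reachable only from the application tier. It assumes a PostgreSQL database on port 5432, and `AppVpc` and `AppSecurityGroup` are hypothetical resources assumed to be defined elsewhere in the same template.

```yaml
Resources:
  DatabaseSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: Allow PostgreSQL only from the application tier
      VpcId: !Ref AppVpc                               # hypothetical VPC defined elsewhere
      SecurityGroupIngress:
        # No CidrIp rule: the database is never opened to an address range,
        # only to members of the application's security group
        - IpProtocol: tcp
          FromPort: 5432
          ToPort: 5432
          SourceSecurityGroupId: !Ref AppSecurityGroup # hypothetical app security group
```

Security groups are stateful, so return traffic is allowed automatically; network ACLs (stateless, per subnet) and route tables add further, coarser layers around the same resources.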

Kubernetes deployment

If you need more granular control over access to Kubernetes resources, the best way to have full control is using Network Policies.

Network Policies give us the ability to configure access to our resources in depth and with good flexibility. Through Network Policies we can control ingress and egress traffic by (not a complete list):

- Pod labels (pod selectors)

- Namespace labels (namespace selectors)

- IPBlock (defined by CIDR, with the possibility of exceptions)

- Ports and protocols

I highly recommend using Network Policies in Kubernetes in combination with network peering, as well as whenever certain components communicate over the open internet.
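A minimal sketch of such a policy, assuming backend pods labeled `app: backend` and a database reachable over a peered network at the hypothetical CIDR `10.20.0.0/16` (the excluded subnet is hypothetical too). Keep in mind that Network Policies are only enforced if the cluster's network plugin (CNI) supports them.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-egress
  namespace: default
spec:
  # Applies to every pod labeled app: backend in this namespace
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
    - Egress
  egress:
    # Database over the peered network, minus a subnet the app must never reach
    - to:
        - ipBlock:
            cidr: 10.20.0.0/16
            except:
              - 10.20.99.0/24
      ports:
        - protocol: TCP
          port: 5432
    # DNS to any namespace; without this rule, name resolution stops working
    - to:
        - namespaceSelector: {}
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

Once an egress policy selects a pod, all egress that is not explicitly allowed is dropped for that pod, which is why the DNS rule has to be listed.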

Network peering

Network peering is the process of creating one hybrid private network from two separate private networks. These private networks (virtual private clouds, or VPCs, to be more precise) are named differently in different cloud systems, and so is peering, but the ultimate purpose is the same: connecting components through a protected network of networks that encapsulates all the traffic and all the components inside it, protecting them from outside influences.

Whatever approach you take, you will be creating a private network between the systems hosting Kubernetes and the database, which results in a safe connection without any outside access to it.

The nuances depend on how the components are hosted, so I will quickly summarize the most common cases here:

- Kubernetes and the database are hosted in the same cloud system - in this case, creating virtual private clouds and connecting them is simple, and you also benefit from traffic staying on the cloud provider's internal network, which has a positive impact on performance.

- Kubernetes and the database are hosted in different cloud systems, or in a combination of cloud and on-prem infrastructure - in this case, the connection can be established through a VPN setup that creates the needed private networking, but this ultimately impacts connection performance.

- Hosting the database in Kubernetes - still an option in some cases. It means mounting storage into Kubernetes and hosting the database as a Deployment/StatefulSet that uses this storage. Securing it then becomes a matter of securing access to the storage, which again means mounting a cloud drive either through virtually connected networks or within the same cloud. You are just shifting from securing the database connection to securing the connection to the data storage (hard drive).
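For the first case, assuming both VPCs live in the same AWS account and region, peering plus routes in both directions could be sketched in CloudFormation as follows. `KubernetesVpc`, `DatabaseVpc`, the two route tables, and both CIDR ranges are hypothetical.

```yaml
Resources:
  KubernetesToDatabasePeering:
    Type: AWS::EC2::VPCPeeringConnection
    Properties:
      VpcId: !Ref KubernetesVpc        # hypothetical VPC hosting the cluster
      PeerVpcId: !Ref DatabaseVpc      # hypothetical VPC hosting the database
  # Traffic from the cluster's private subnets to the database VPC
  RouteToDatabaseVpc:
    Type: AWS::EC2::Route
    Properties:
      RouteTableId: !Ref KubernetesPrivateRouteTable
      DestinationCidrBlock: 10.20.0.0/16   # hypothetical database VPC CIDR
      VpcPeeringConnectionId: !Ref KubernetesToDatabasePeering
  # Return path: peering is useless without routes on both sides
  RouteToKubernetesVpc:
    Type: AWS::EC2::Route
    Properties:
      RouteTableId: !Ref DatabasePrivateRouteTable
      DestinationCidrBlock: 10.10.0.0/16   # hypothetical Kubernetes VPC CIDR
      VpcPeeringConnectionId: !Ref KubernetesToDatabasePeering
```

Peered VPCs must have non-overlapping CIDR ranges, and peering only makes the networks reachable; security groups and Network Policies still decide what may actually talk to the database.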

Ingress

Securing ingress traffic has a few components to it:

- Brute-force attack (DDoS) protection

- Script (bot) protection

- Application structure/technology related

When planning any application that faces the public internet, pay serious attention to providing at least basic protection from brute-force attacks. Depending on the nature of your application and API endpoints, there will be different approaches and different needs.

Sensors APIs ingress

Traffic on this side is 100% unidirectional, and thus we would expect only two types of malicious attacks:

- Data pollution - where someone would send fake and corrupted data with the goal to:

  - pollute the data and disrupt the service quality

  - discover potential entry vectors

- DDoS - hitting the APIs with high load in order to bring down the system

Data pollution and exploit attacks are usually handled on the software side by validating data on entry and taking special care to apply the best and most secure API practices. In this case, there is either no need for a response or a need for just a single one (data received), which means the API should always give the same response or no response at all.

Make sure that your interfaces respond with a minimal amount of cleaned-up, validated data across all types of responses, and that exceptions of any type are visible only in system logs and other protected data accessible only to your team. Be secure even in failure.

System responses on any interface (UI, API, etc.) will always be on the front line of defense against malicious attacks. Attackers quite commonly try different inputs and request variations to make the system respond with a change of state (exception, data dump, info) that can be used to further manipulate it in order to gain access or cause damage in other ways. If any part of your system gives the attacker enough information through this exercise to advance further, it becomes an entry point, also known as a vulnerability, that can be exploited.
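For the sensors API, entry validation and the single uniform response can be pinned down in the API contract itself. The OpenAPI sketch below is hypothetical (the path, field names, and value bounds are assumptions): the schema rejects unknown fields and out-of-range values, and the only documented response is an empty `202 Accepted`. The assumption is that the service enforces this schema, drops invalid payloads, and records the reason only in internal logs, so a caller cannot tell an accepted reading from a discarded one.

```yaml
openapi: 3.0.3
info:
  title: Sensor ingestion API (hypothetical)
  version: 1.0.0
paths:
  /readings:
    post:
      requestBody:
        required: true
        content:
          application/json:
            schema:
              $ref: '#/components/schemas/Reading'
      responses:
        # The one and only response, identical for accepted and discarded data
        '202':
          description: Received. No body is returned.
components:
  schemas:
    Reading:
      type: object
      additionalProperties: false     # unknown fields are rejected, not ignored
      required: [sensorId, timestamp, value]
      properties:
        sensorId:
          type: string
          pattern: '^[a-z0-9-]{1,64}$'  # tight allowlist instead of free text
        timestamp:
          type: string
          format: date-time
        value:
          type: number
          minimum: -1000                # assumed physical range of the sensor
          maximum: 1000
```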

DDoS protection is usually applied at several layers:

- On the Kubernetes ingress

- On the cloud solution's edge servers - some cloud solutions offer DDoS mitigation as part of their shared responsibility package

- On the edge solution of choice - if you are using a CDN that can handle DDoS attacks
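At the Kubernetes ingress layer, basic per-client rate limiting is often just configuration. Assuming the ingress-nginx controller (other controllers use different mechanisms), a sketch could look like this; the host, service name, and limits are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: public-api
  annotations:
    # Requests per second accepted from a single client IP
    nginx.ingress.kubernetes.io/limit-rps: "10"
    # Concurrent connections allowed from a single client IP
    nginx.ingress.kubernetes.io/limit-connections: "20"
spec:
  ingressClassName: nginx
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: public-api
                port:
                  number: 80
```

This only blunts modest abuse from individual addresses; large volumetric attacks have to be absorbed further out, at the cloud edge or the CDN.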

Web and mobile app API ingress

This type of ingress faces one additional type of attack: functional attacks by bots or individuals. This is more critical for this API because its main purpose is to respond to varied requests, so there is no simple protection. Virtually every interactive system on the internet is hit by some kind of functional attack, especially by automated scripts or bots trying to exploit functionality in different ways.

Full protection from this kind of attack spans the whole security structure, but in our case we can plan two important basic setups that will help mitigate these attacks:

- An API gateway for ingress traffic, with capabilities like API keys, per-consumer rate limits, etc.

- A web application firewall (WAF) for protection from most known malicious attacks (injections of all kinds, scanners, probes, cross-site scripting, etc.)

Apart from these two, this API needs the same protection as recommended for the sensors APIs.
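On AWS, for example, a WAF can carry both known-attack rules and a rate-based rule. The CloudFormation sketch below attaches AWS's managed common rule set and a per-IP rate limit to a load balancer; the ACL name, the limit of 1,000 requests per 5-minute window, and the `PublicApiLoadBalancer` resource are assumptions for illustration. Per-consumer quotas tied to API keys would live in the API gateway itself (for example, usage plans in Amazon API Gateway).

```yaml
Resources:
  PublicApiWebAcl:
    Type: AWS::WAFv2::WebACL
    Properties:
      Name: public-api-acl
      Scope: REGIONAL                 # for ALB / API Gateway, not CloudFront
      DefaultAction:
        Allow: {}
      VisibilityConfig:
        SampledRequestsEnabled: true
        CloudWatchMetricsEnabled: true
        MetricName: public-api-acl
      Rules:
        # AWS-maintained rules for common exploits (injections, bad inputs, etc.)
        - Name: aws-common-rules
          Priority: 0
          OverrideAction:
            None: {}
          Statement:
            ManagedRuleGroupStatement:
              VendorName: AWS
              Name: AWSManagedRulesCommonRuleSet
          VisibilityConfig:
            SampledRequestsEnabled: true
            CloudWatchMetricsEnabled: true
            MetricName: aws-common-rules
        # Block any single IP exceeding the limit within the evaluation window
        - Name: rate-limit-per-ip
          Priority: 1
          Action:
            Block: {}
          Statement:
            RateBasedStatement:
              Limit: 1000
              AggregateKeyType: IP
          VisibilityConfig:
            SampledRequestsEnabled: true
            CloudWatchMetricsEnabled: true
            MetricName: rate-limit-per-ip
  PublicApiWebAclAssociation:
    Type: AWS::WAFv2::WebACLAssociation
    Properties:
      ResourceArn: !Ref PublicApiLoadBalancer   # hypothetical ALB; Ref returns its ARN
      WebACLArn: !GetAtt PublicApiWebAcl.Arn
```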

Accessing stuff

The system we are building has only one connection to a truly third-party system, but it is quite an important one: the user authentication service from a SaaS provider.

Generally, the advantage of using this approach is that security is almost fully handed over to a specialized third party. The disadvantage, however, is that security is almost fully handed over to a specialized third party - we have to be super careful about access.

One thing we have to take care of here is any potential secrets (like usernames, passwords, and keys) that our app uses to access this service. Any data of this type should be picked up by the app from Kubernetes Secrets or even directly from the key management system. This way, no secrets end up in unsafe places, or at least in places far less safe than Kubernetes Secrets.

How safe are Kubernetes Secrets? They are safe to use in the sense of reliability: they are as stable as Kubernetes itself, you will always be able to access them, and Kubernetes has a seamless mechanism for mounting secrets into your pods. However, although reliable, they are not very secure by nature :) Kubernetes Secrets are only base64-encoded, not encrypted; unless encryption at rest is configured, they are stored unencrypted in etcd, and they are open to anyone who has access to that part of the Kubernetes API. So you need to set up your Kubernetes access and permission structures properly. Base64 encoding of secrets is not done for security reasons but for transport reasons: it lets arbitrary, including binary, values be carried safely inside text formats such as YAML and JSON.
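To make this concrete, here is a hypothetical Secret for the authentication provider's client secret (the value is a dummy), together with a Role that can read only that one Secret. The names and the `backend` namespace are assumptions, and the Role still has to be bound to a user or service account with a RoleBinding.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: auth-provider-credentials
  namespace: backend
type: Opaque
data:
  # base64 of the string "s3cr3t": encoded, not encrypted;
  # `echo czNjcjN0 | base64 -d` prints the plain value
  clientSecret: czNjcjN0
---
# Least privilege: this Role can read exactly one Secret and nothing else
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: read-auth-credentials
  namespace: backend
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["auth-provider-credentials"]
    verbs: ["get"]
```

Note that anyone allowed to create pods in the namespace can mount any Secret in it, so pod-creation rights deserve the same care as direct Secret access.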

Security setups and processes

Diving deeper into security considerations, I will focus more on the processes and areas where human error has a lot of influence: access/permission setup, process, and secrets management.

Secrets

As mentioned above, your applications should read secrets from a centralized place; that place should be safe and secure, and the secrets should be rotated.

It is absolutely unacceptable for secrets to be stored in the code or any similarly readable place. Code and documentation are one-touch accessible data: their whole point is to be easily accessible to anyone involved in building your product, so keeping secrets there directly contradicts the goal of making secure data hard to reach.

There are multiple approaches you can take, but let's boil them down to the most common cases:

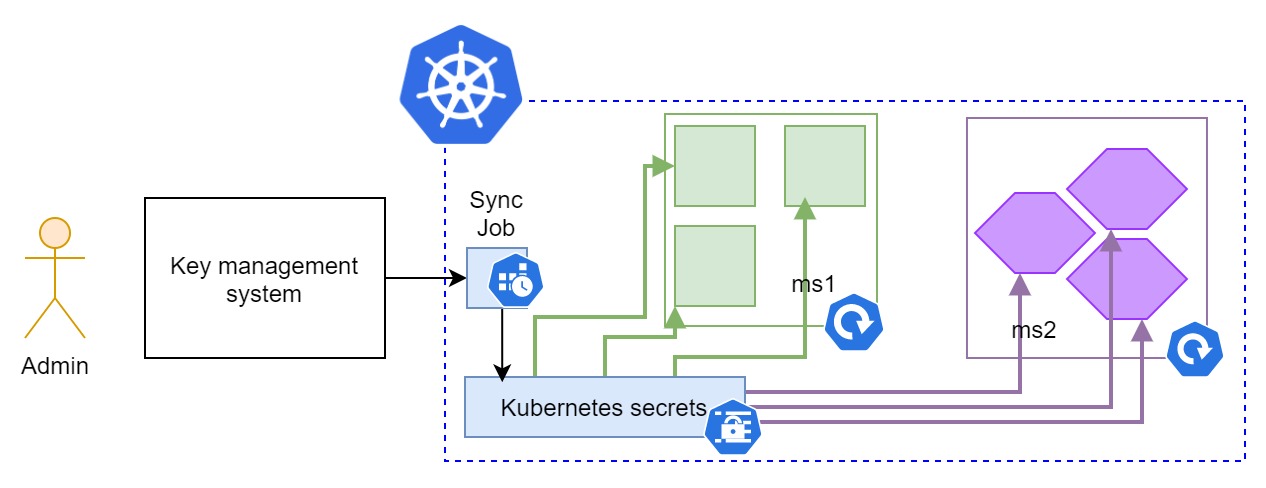

- Using a third-party/cloud KMS solution (AWS KMS, Fortanix, GCP Cloud KMS, etc.), with two possible approaches depending on architecture, preferences, scale, etc.:

  - Secrets are read directly from the secrets repository: the less performant and less secure variant, but still acceptable.

  - Secrets are read from an immediately available medium (not from the code) and synced into that medium from the secrets repository (key management service, KMS).

- Deploying a self-hosted solution (HashiCorp Vault, for example), which has lots of benefits if you have a certain scale, complexity, and a team to manage it. It comes with its own bag of problems to solve.

Third-party solution (or self-hosted separately)

When accessing secrets directly from KMS, the pro is simplicity of architecture, and the cons are simplicity of architecture, scalability issues in container orchestration, and weaker security. Let's quickly analyze:

- You will not have to build any additional solutions; your app simply calls KMS every time it boots in order to fetch the secrets.

- To do this, you need either a secret for accessing KMS stored somewhere, or your app on the KMS access list.

- If you are running high-scale microservices, with hundreds or thousands of pods, you will generate a lot of load on the KMS service on every container respawn, and then you have to care about the architecture and scalability of KMS instead of just its security.
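On EKS, for example, one way to put the app on the KMS access list without storing any static credential is IAM Roles for Service Accounts (IRSA): the service account is annotated with an IAM role, and AWS SDKs inside the pod obtain temporary credentials for it automatically. The role ARN, account ID, and all names below are hypothetical.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: backend
  namespace: backend
  annotations:
    # Hypothetical IAM role whose policy allows only reading the app's secrets
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/backend-kms-read
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend
  namespace: backend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      # Pods run under the annotated identity; no access key is stored anywhere
      serviceAccountName: backend
      containers:
        - name: backend
          image: registry.example.com/backend:1.0   # hypothetical image
```

This removes the stored secret, but not the load problem from the last point: every replica still calls KMS on start-up.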

When secrets are read from some directly available medium, you get quite a few advantages over the first case, but you have to do more work and maintain more components, which is also a positive thing if we are adhering to our many-hurdles principle:

- You will be using Kubernetes Secrets and exposing them as environment variables of your pods, which makes this a much more scalable and resilient solution: there is no difference between attaching 10 and 10k pods to the same secret, and there is no dependency on the connection to KMS.

- You will have a job (most commonly) or a microservice syncing secrets from KMS to Kubernetes Secrets, which gives you an additional place to build a wall around and makes it harder to reach the single source of truth for this data.

- KMS is completely invisible to the publicly exposed system, which means that an attacker needs to first gain access to your app, then to your job, and then to KMS, which should also be hard since the job has only read access through the API and secrets are rotated often.
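The sync variant could look roughly like this: a CronJob with its own service account periodically copies secrets from KMS into a Kubernetes Secret, and the application only ever sees that Secret. The sync image and its `--target-secret` flag are hypothetical placeholders for whatever tool or in-house job you use, and the 15-minute schedule is an arbitrary example.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: kms-secret-sync
  namespace: backend
spec:
  schedule: "*/15 * * * *"      # re-sync every 15 minutes so rotated values propagate
  concurrencyPolicy: Forbid      # never run two syncs at the same time
  jobTemplate:
    spec:
      template:
        spec:
          # The only identity allowed to read from KMS and write the target Secret
          serviceAccountName: kms-secret-sync
          restartPolicy: OnFailure
          containers:
            - name: sync
              # Hypothetical image and flag: reads from KMS, writes the Kubernetes Secret
              image: registry.example.com/kms-secret-sync:1.0
              args: ["--target-secret", "auth-provider-credentials"]
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend
  namespace: backend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
        - name: backend
          image: registry.example.com/backend:1.0   # hypothetical application image
          env:
            # The app reads a plain environment variable and never talks to KMS
            - name: AUTH_CLIENT_SECRET
              valueFrom:
                secretKeyRef:
                  name: auth-provider-credentials
                  key: clientSecret
```

Environment variables are resolved when a container starts, so pods pick up a rotated value only after a restart; mounting the Secret as a volume instead lets the kubelet refresh the files in running pods.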

Self-hosted KMS

In any case, a self-hosted solution requires you to deploy and maintain the system that holds and manages your secrets. If you decide to do this, make sure that your architecture and future plans justify it.

Using Vault as an example, you can deploy it inside Kubernetes with Consul as the foundation, which gives you good scalability, service discovery capabilities, and highly available secrets in a distributed manner while keeping them as secure as it gets. Additionally, it makes it possible to extend this key management to other hosting locations as well.

However, you will see how deep the rabbit hole goes when you start managing the scale and availability of this solution, along with its accessibility on the network, service discovery, etc. These setups require time and commitment, along with a lot of trial and error. If you decide to go for this, be patient with your team.
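As a starting point, here is a sketch of Helm values for the official `hashicorp/vault` chart in HA mode with Consul storage. It assumes Consul agents already run on every node (for example via the Consul Helm chart) and that a Secret named `vault-tls` (hypothetical) holds the TLS certificate and key; value names follow the chart as I know it, so verify them against the chart version you deploy.

```yaml
global:
  tlsDisable: false            # serve Vault over HTTPS only
server:
  extraVolumes:
    # Hypothetical Secret with tls.crt / tls.key, mounted under /vault/userconfig/vault-tls
    - type: secret
      name: vault-tls
  ha:
    enabled: true
    replicas: 3                # keep serving when a node is lost
    config: |
      ui = true
      listener "tcp" {
        address         = "[::]:8200"
        cluster_address = "[::]:8201"
        tls_cert_file   = "/vault/userconfig/vault-tls/tls.crt"
        tls_key_file    = "/vault/userconfig/vault-tls/tls.key"
      }
      # Consul as the storage backend; the chart replaces HOST_IP at startup
      # so that each Vault pod talks to the Consul agent on its own node
      storage "consul" {
        path    = "vault"
        address = "HOST_IP:8500"
      }
      service_registration "kubernetes" {}
```

Even with this in place, Vault pods start sealed and have to be initialized and unsealed, which is one more level of the rabbit hole.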

The principle of least privilege

One of the essential things for securing the foundation of your project is getting access to everything right.

There is only one way to do that: the principle of least privilege.

Anyone on the system should have only the minimal access rights (privileges) that enable them to perform their tasks, which quite often reads as: USE RBAC.

Roles

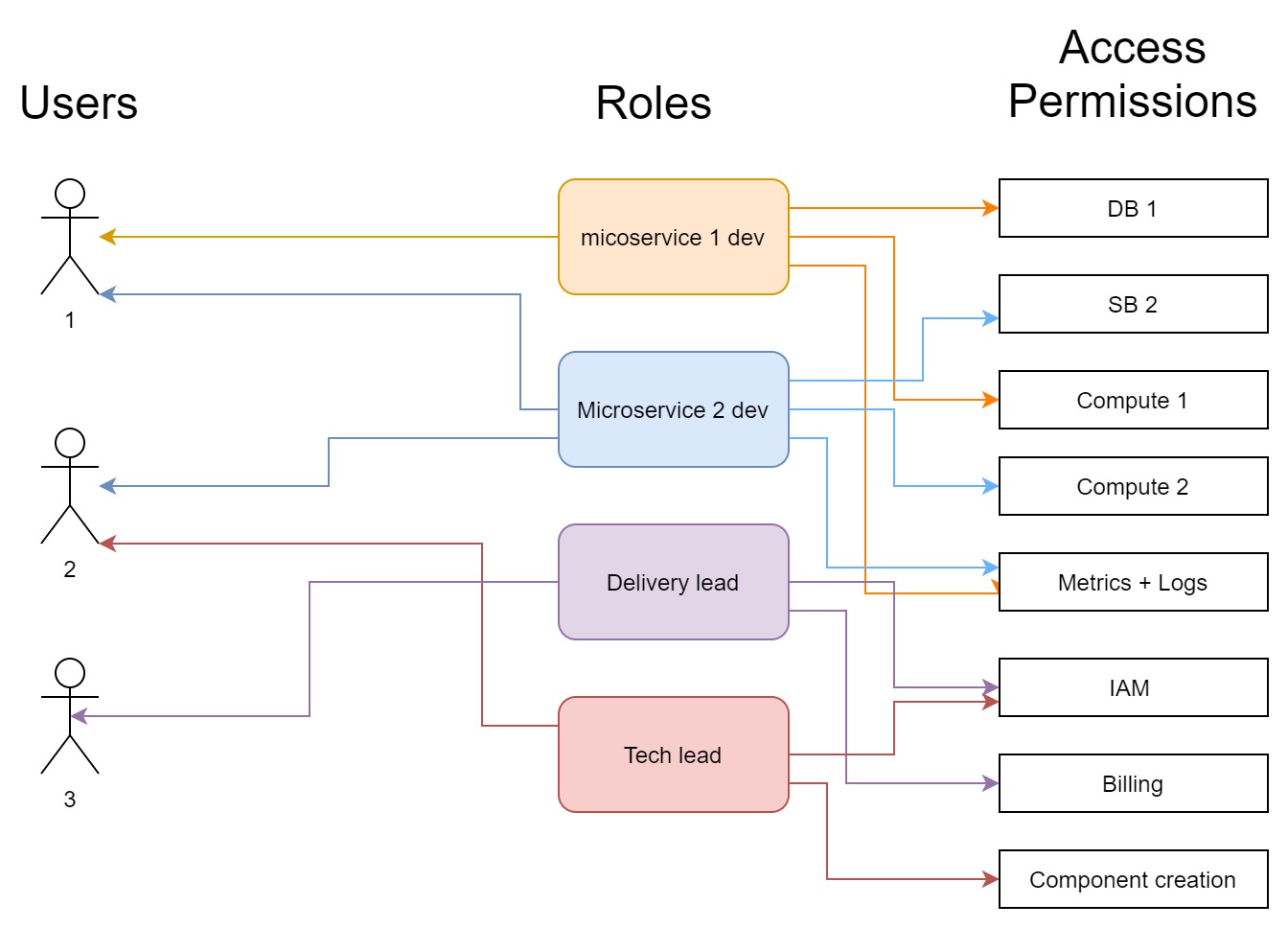

The best way to build a setup based on the least privilege principle is to create roles in your system. Implementations vary, so let's look at two things: the Kubernetes approach and the idea behind the cloud approach.

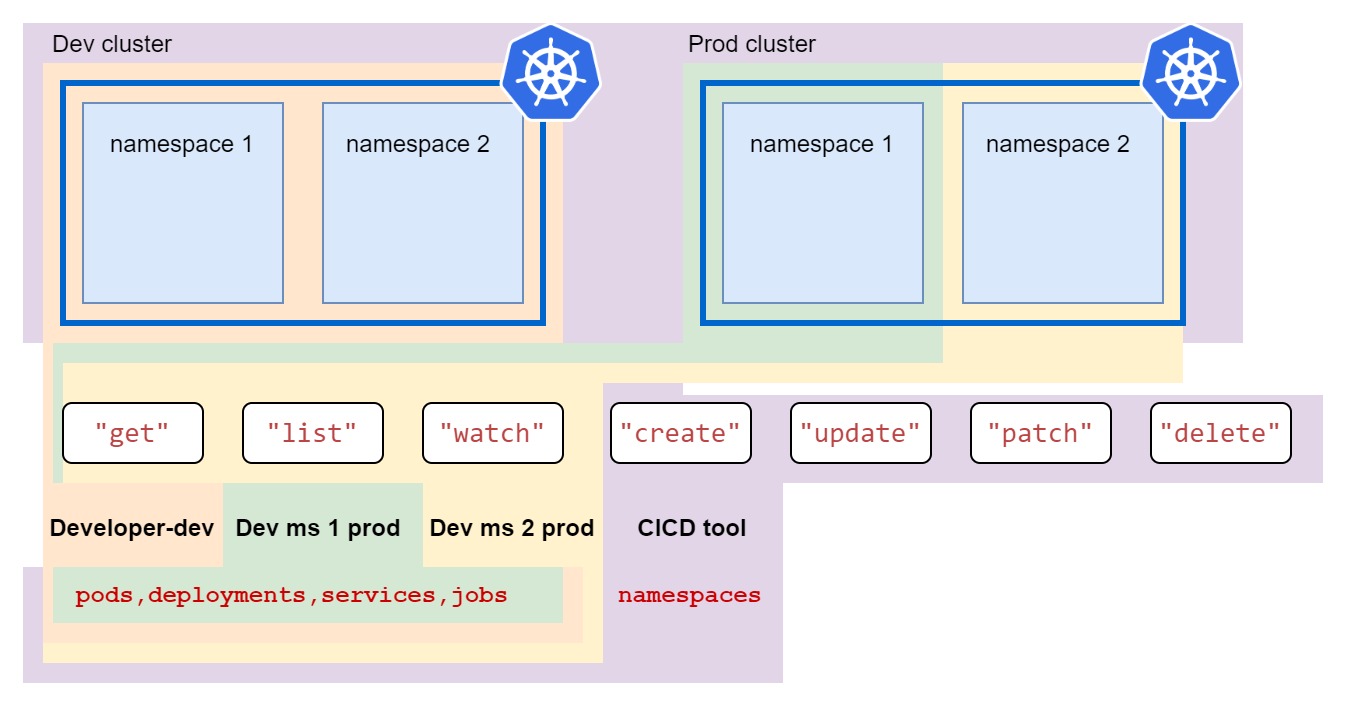

Kubernetes access

In Kubernetes, it is important to set up a proper access structure before starting anything else. So, as soon as your cluster is set up (or even before), you should have the Kubernetes API objects for cluster access roles ready.

Kubernetes has RBAC (Role-Based Access Control) enabled by default in most current clusters, so do not change anything there; just implement your pre-designed roles.

Setting up RBAC in Kubernetes is easy; an example object from the documentation looks like this:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
```

The role above allows the entity bound to it to execute "get", "watch", and "list" on pods in the default namespace.

Using another API object, a RoleBinding, this role can be bound to a user, who then gets the pod-reader role and can have fun reading the pods.
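Binding the `pod-reader` role to a user could look like the following sketch, which follows the pattern from the Kubernetes RBAC documentation; the binding name and the user name `jane` are just examples.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: default          # the binding only grants access inside this namespace
subjects:
- kind: User
  name: jane                  # example user name, as known to your authenticator
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role                  # must match the kind and name of an existing Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```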

For a more real-life example, refer to the following diagram:

Cloud access

When working with a cloud solution, the best approach is to design roles/groups on a case-by-case basis. The flexibility of cloud usage and the many ways teams use it mean that I can provide only a couple of guidelines here.

Access needs should be carefully evaluated and discussed with the technical lead, the system architect, and potentially anyone organizing the development process.

For designing and creating the permission/access structure, GCP and AWS both have convenient, enterprise-grade IAM (Identity and Access Management), and Azure is in quite a good place with AAD (Azure Active Directory, now renamed Microsoft Entra ID).

You should end up with access roles designed based on your enterprise architecture, team structure, and development process (influences in that order).
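As one hedged example of what a role designed from these inputs can look like on AWS, the CloudFormation sketch below gives a hypothetical `reporting-analysts` group read-only access to a single hypothetical bucket and nothing else.

```yaml
Resources:
  ReportingReadOnlyPolicy:
    Type: AWS::IAM::ManagedPolicy
    Properties:
      Description: Read-only access to one reports bucket, nothing else
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            # Only the two actions the team actually needs
            Action:
              - s3:GetObject
              - s3:ListBucket
            # Scoped to a single (hypothetical) bucket and its objects
            Resource:
              - arn:aws:s3:::example-reports-bucket
              - arn:aws:s3:::example-reports-bucket/*
  ReportingGroup:
    Type: AWS::IAM::Group
    Properties:
      GroupName: reporting-analysts
      ManagedPolicyArns:
        - !Ref ReportingReadOnlyPolicy   # Ref of a managed policy returns its ARN
```

Attaching permissions to groups rather than individual users keeps the role structure reviewable: people move between groups, while the policies themselves rarely change.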

Careful evaluation of access needs is essential for establishing a role structure for your system. A simple role system could look something like the following role chart:

Security in release process

A whole article in this series about releasing and managing the software lifecycle is coming up soon, so without going too deep into CI/CD:

- You need static code scans. Yes, they are quirky, full of false positives, and no one likes them, but spend time ironing out the false positives and integrate the scans into your release process; they are valuable for the overall security of your system.

- Always have peer reviews.

- Have someone on the team who champions security. It is not a full-time job; it is someone who takes an interest in the security of what your team is building and focuses on putting an additional pair of eyes on code and architecture reviews. Choose this person wisely.
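As an illustration of wiring a static scan into the release process, assuming GitHub Actions as the CI system and CodeQL as the scanner (any comparable tool works the same way), a pull-request check could look like this. The language list is a placeholder for your own stack.

```yaml
name: static-analysis
on:
  pull_request:
    branches: [main]
jobs:
  codeql:
    runs-on: ubuntu-latest
    # Least privilege for the CI token itself: read code, write scan results only
    permissions:
      contents: read
      security-events: write
    steps:
      - uses: actions/checkout@v4
      - uses: github/codeql-action/init@v3
        with:
          languages: javascript   # placeholder: list your project's languages
      - uses: github/codeql-action/analyze@v3
```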

Some additional recommendations - tightening security

General/Cloud - security process

- Make people rotate their passwords often

- Set up strong password policies

- Use MFA and make it mandatory wherever possible
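Mandatory MFA can be enforced rather than just requested. On AWS, one widely used pattern is a policy that denies everything except MFA self-setup when the caller has not authenticated with MFA; the sketch below assumes it is attached to a hypothetical `engineers` group. Because an explicit deny overrides any allow, the group's other permissions only take effect after an MFA sign-in.

```yaml
Resources:
  RequireMfaPolicy:
    Type: AWS::IAM::ManagedPolicy
    Properties:
      Description: Deny most actions unless the caller signed in with MFA
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Sid: DenyAllExceptMfaSetupWithoutMfa
            Effect: Deny
            # Everything except what a user needs to enrol an MFA device
            NotAction:
              - iam:CreateVirtualMFADevice
              - iam:EnableMFADevice
              - iam:GetUser
              - iam:ListMFADevices
              - iam:ListVirtualMFADevices
              - iam:ResyncMFADevice
              - sts:GetSessionToken
            Resource: '*'
            Condition:
              # Also matches when the key is absent (e.g. long-term access keys)
              BoolIfExists:
                aws:MultiFactorAuthPresent: 'false'
  EngineersGroup:
    Type: AWS::IAM::Group
    Properties:
      GroupName: engineers
      ManagedPolicyArns:
        - !Ref RequireMfaPolicy
```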

K8s - Security context

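Both pods and containers can have their securityContext set up in the Deployment (or Pod) manifest. When a setting is defined at both levels, the container-level value wins, and some settings exist only at the container level.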

Generally, there are three things you can do to enhance the security of your k8s deployments and avoid as many exploits as possible, such as Kubernetes- or kernel-level bugs that could give malicious users access to your pods.

Run as non-root

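You should always run pods as a non-root user. Running pods as root gives too much power to whoever gains access to them.

This is achieved through the runAsUser setting (with a non-zero UID) in securityContext, optionally combined with runAsNonRoot: true, which makes the kubelet refuse to start a container that would run as root.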

Read only root file system

The root filesystem should always be read-only for any pod. Write access to the root filesystem is the first thing someone who gains access to a pod would try to exploit.

This is achieved through the readOnlyRootFilesystem setting, which is available only in the container-level securityContext.

Stop privilege escalation

As you may imagine, stopping any process from gaining additional privileges is quite a security priority, so let's focus on that as well.

This is achieved through the allowPrivilegeEscalation setting (set to false), which is also available only at the container level.

Example

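```yaml
apiVersion: v1
kind: Pod
metadata:
  name: security-context-demo-2
spec:
  # Pod-level defaults, inherited by every container in the pod
  securityContext:
    runAsUser: 1000
    runAsNonRoot: true               # refuse to start if a container would run as UID 0
  containers:
  - name: sec-ctx-demo-2
    image: gcr.io/google-samples/node-hello:1.0
    # Container-level settings; runAsUser here overrides the pod-level value
    securityContext:
      runAsUser: 2000
      readOnlyRootFilesystem: true     # container-level only
      allowPrivilegeEscalation: false  # container-level only
```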
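A read-only root filesystem often breaks applications that write temporary files. A common workaround, sketched here with a hypothetical pod name and an assumed `/tmp` write path, is to mount a writable `emptyDir` volume only where the app needs it and keep everything else read-only.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: readonly-rootfs-demo   # hypothetical name
spec:
  securityContext:
    runAsUser: 1000
    runAsNonRoot: true
  containers:
  - name: app
    image: gcr.io/google-samples/node-hello:1.0
    securityContext:
      readOnlyRootFilesystem: true
      allowPrivilegeEscalation: false
    volumeMounts:
    # The only writable path in the container (assumed to be where the app writes)
    - name: tmp
      mountPath: /tmp
  volumes:
  # Scratch space that lives and dies with the pod
  - name: tmp
    emptyDir: {}
```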

Security Policy

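Pod Security Policy setup allowed a lot more control over pod security. Note that PodSecurityPolicy was deprecated in Kubernetes 1.21 and removed in 1.25; its built-in replacement is Pod Security Admission. For the sake of the article's length and to cover just the essential best practices, I am leaving you with the link to the documentation: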

https://kubernetes.io/docs/concepts/policy/pod-security-policy/
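With Pod Security Admission, enforcement is configured per namespace through labels. A minimal sketch, assuming a hypothetical `backend` namespace that should follow the strictest built-in profile:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: backend
  labels:
    # Reject pods that violate the "restricted" Pod Security Standard
    pod-security.kubernetes.io/enforce: restricted
    pod-security.kubernetes.io/enforce-version: latest
    # Also warn kubectl users at apply time
    pod-security.kubernetes.io/warn: restricted
```

The restricted profile also requires dropping all Linux capabilities and a RuntimeDefault (or Localhost) seccomp profile, so a pod carrying only the three settings described above would still be rejected in such a namespace.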

Summary

- At the architecture level, security is an influence on the design, not an upgrade applied on top of it: architecture should be done with security in mind.

- The principle of least privilege is the golden standard of identity and access management (IAM)

- Simplicity and DRY do not work in application security. With security more is more - make it robust and ultimately hard for the attacker to achieve anything.

- You are never done with securing your system.
