Services and networking — from ClusterIP to Ingress

As you make your way through the stages of your app’s development and (inevitably) get to consider Kubernetes, it is time to understand how your app’s components connect to each other and to the outside world once deployed to Kubernetes.

The knowledge you will get from this article also covers the “services & networking” part of the CKAD exam, which currently makes up 13% of the certification exam curriculum.

Services

Kubernetes services provide networking between the different components within the cluster, and between the cluster and the outside world (the open internet, other applications, networks, etc.).

There are different kinds of services, and here we’ll cover some:

- NodePort: a service that exposes pods through a port on the node

- ClusterIP: a service that creates a virtual IP within the cluster, so that different services within the cluster can talk to each other

- LoadBalancer: a service that provisions an external load balancer in front of a set of pods in the Kubernetes cluster

NodePort

A NodePort service maps (exposes) a port on the Pod to a port on the Node, which exposes a deployment (a set of pods) to the outside of the k8s node. There are actually 3 ports involved in the process:

- targetPort: the port on the Pod (where your app listens). This parameter is optional; if it is not present, the value of port is used.

- port: the port on the service itself (usually the same as the Pod port).

- nodePort: the port on the node that is used to access the service externally. By default, nodePort must be in the range 30000 to 32767. This parameter is optional; if it is not present, a random available port in the valid range is assigned.

Creating the NodePort service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: k8s-nodeport-service
spec:
  type: NodePort
  ports:
    - targetPort: 6379
      port: 6379
      # Field names are case-sensitive: nodePort, not NodePort
      nodePort: 30666
  selector:
    db: redis
```
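Because targetPort and nodePort are both optional, a minimal sketch of the same service only needs to declare port. Kubernetes then uses 6379 as the target port and assigns a free node port from the 30000 to 32767 range. The name k8s-nodeport-service-minimal is hypothetical, used only to avoid clashing with the service above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: k8s-nodeport-service-minimal  # hypothetical name, for illustration only
spec:
  type: NodePort
  ports:
    # targetPort defaults to port (6379); nodePort is auto-assigned from 30000-32767
    - port: 6379
  selector:
    db: redis
```

After creating it, `kubectl get service k8s-nodeport-service-minimal` shows which node port was assigned in the PORT(S) column.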

Linking pods to a service

Selectors are the way to link a service to a certain set of pods. Every pod whose labels match the selector (in almost all cases, the pods of a single deployment) is selected, and the service spreads traffic across all of them (by default, picking a backend pod at random), effectively acting as a load balancer.

A service is not tied to a single node: if the selected pods are spread across several nodes, the service still reaches all of them. A NodePort service, in particular, opens the same port on every node in the cluster.
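To make the link concrete, here is a sketch of a Deployment that the NodePort service above would pick up. The deployment name redis and the use of the public redis image are assumptions for illustration. What matters is that the pod template labels match the service selector (db: redis): every pod created from this template becomes a backend of the service, and scaling the deployment up adds pods that the service picks up automatically, on whichever nodes they land.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis  # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      db: redis
  template:
    metadata:
      labels:
        db: redis  # matches the service selector db: redis
    spec:
      containers:
        - name: redis
          image: redis  # public Redis image; pin a version in real setups
          ports:
            - containerPort: 6379  # the service's targetPort
```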

ClusterIP

When an application consists of multiple tiers deployed to different sets of pods, we need a way to establish communication between those tiers inside the cluster.

For example, we have:

- 5 pods of API number 1

- 2 pods of API number 2

- 1 pod of redis

- 10 pods of frontend app

Each of the 18 pods above has its own distinct IP address, but communicating through those addresses directly would be:

- Unstable, since pods can die and be recreated with a new IP at any time.

- Inefficient, since each app would have to load-balance across the pods of every tier it calls.

A ClusterIP service gives each group of pods a unified interface: a single, stable internal name and IP.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: api-1
spec:
  type: ClusterIP
  ports:
    - targetPort: 9090
      port: 9090
  selector:
    app: api-1
    type: backend
```

ClusterIP is the default service type, so if the type is not specified, Kubernetes assumes ClusterIP.

Once this service is created, other applications within the cluster can reach it through the service IP or the service name.
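As a sketch of the consumer side, assume a hypothetical frontend pod in the same namespace whose app reads a hypothetical API_1_URL environment variable. The frontend reaches the api-1 service by name: cluster DNS resolves api-1 (or its fully qualified form, api-1.<namespace>.svc.cluster.local with the default cluster domain) to the service's stable cluster IP, no matter how often the api-1 pods are recreated.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend  # hypothetical pod
  labels:
    app: frontend
spec:
  containers:
    - name: frontend
      image: example/frontend  # hypothetical image
      env:
        # Hypothetical variable read by the app; "api-1" is the service name,
        # resolved by cluster DNS to the ClusterIP of the api-1 service
        - name: API_1_URL
          value: "http://api-1:9090"
```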

LoadBalancer

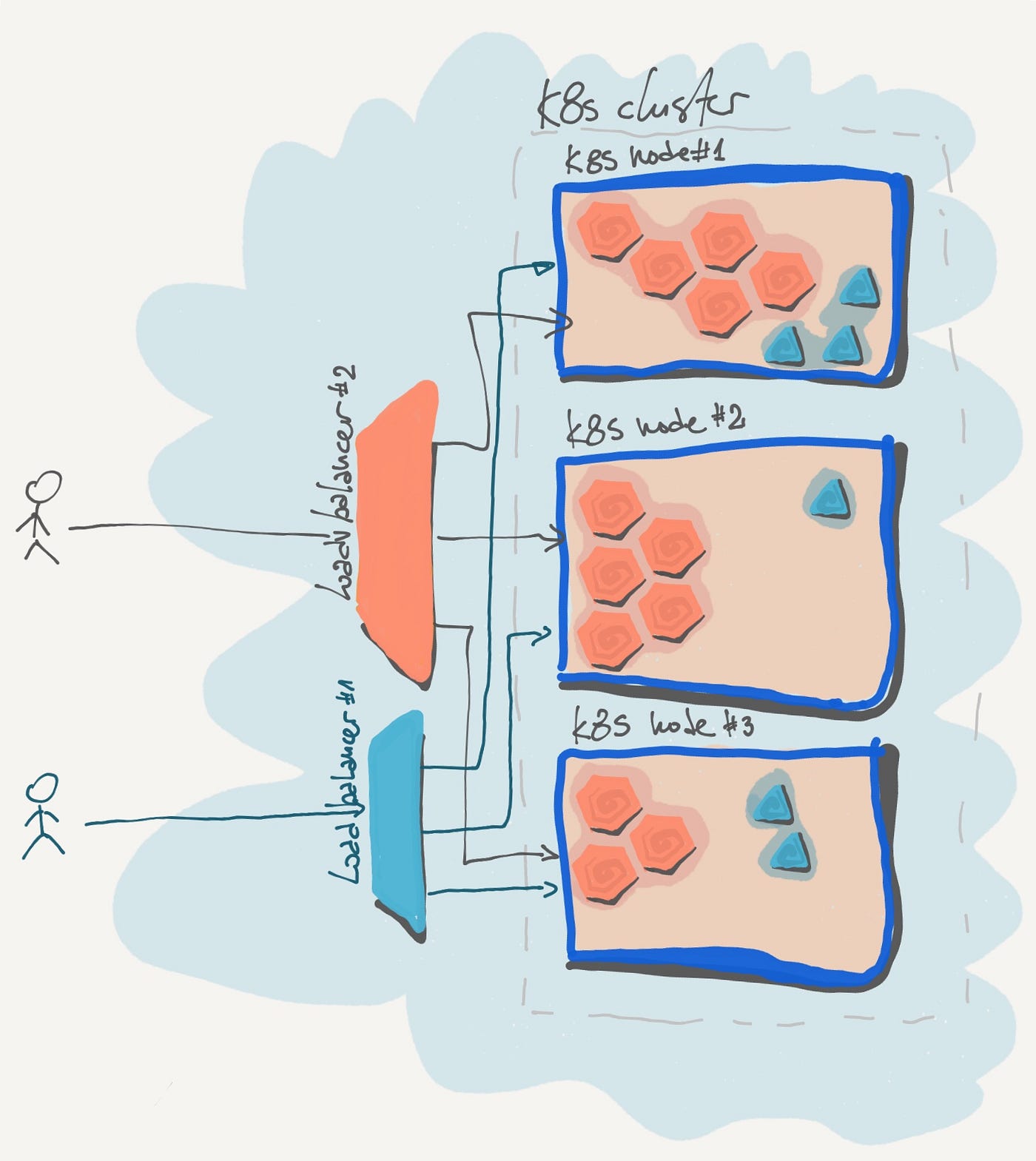

In short, a LoadBalancer service provisions an external load balancer in the cloud, provided your cloud provider supports it.

The deployed load balancer acts like a NodePort service (by default it still allocates a node port behind the scenes), but adds more advanced load-balancing features and behaves as if you had an additional proxy in front of the NodePort: you get a new IP and a standard web port mapping (for example, port 80 instead of 30666). These features make it the main way to expose a service directly to the outside world.

The main downside of this approach is that every service you expose needs its own load balancer, which over time can add significant complexity and cost.

Let’s briefly review the possibilities:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: lb1
spec:
  externalTrafficPolicy: Local
  type: LoadBalancer
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
      name: http
    - port: 443
      targetPort: 443
      protocol: TCP
      name: https
  selector:
    app: lb1
```

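The manifest above creates an external load balancer and sets up all the networking it needs to balance traffic across the nodes. With externalTrafficPolicy: Local, each node forwards external traffic only to pods running on that same node, which preserves the client source IP but changes how traffic is spread, as the note below explains.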

Note from k8s docs: With the new functionality, the external traffic will not be equally load balanced across pods, but rather equally balanced at the node level (because GCE/AWS and other external LB implementations do not have the ability for specifying the weight per node, they balance equally across all target nodes, disregarding the number of pods on each node).

If you want an AWS ELB as the external load balancer with the PROXY protocol enabled, add the following annotations to the load balancer service metadata:

```yaml
metadata:
  name: lb1
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "tcp"
    service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: "*"
```

Ingress

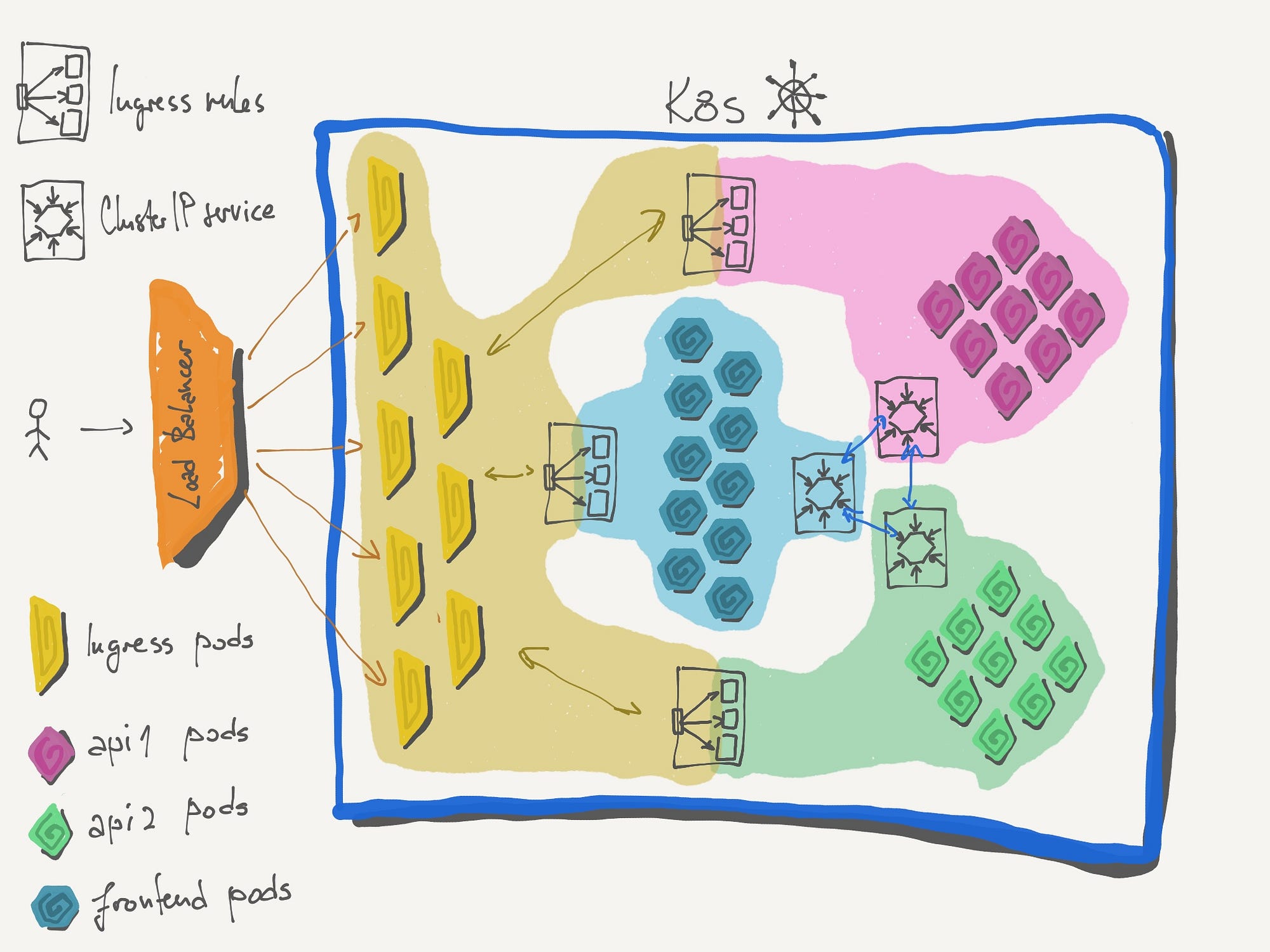

Real life cluster setup

Once we are managing more than one web server, each backed by a different set of pods, the services above become quite complex to manage in most real-life cases.

Let’s return to the example we had before (2 APIs, redis and a frontend) and imagine that the APIs have more consumers than just the frontend, so they need to be exposed to the open internet.

The requirements are as follows:

- frontend lives on www.example.com

- API 1 is the search API at www.example.com/api/search

- API 2 is the general (everything else) API that lives on www.example.com/api

Setup needed using above services:

- ClusterIP service to make components easily accessible to each other within the cluster.

- NodePort services to expose some of the services outside the node, or maybe

- LoadBalancer services if in the cloud, or

- a proxy server like nginx, to connect and route everything properly (30xxx ports to port 80, different services to paths on the proxy, etc.)

- Deciding where to implement SSL and maintaining it across all of the above

So, ClusterIP is necessary; we know it has to be there. It is the only one handling internal networking, and it is as simple as it can be. External traffic, however, is a different story: we have to set up at least one service per component, plus one or more supplementary services (load balancers and proxies), in order to meet the requirements.

The number of configs and definitions to maintain skyrockets, entropy rises, and the infrastructure setup drowns in complexity.

Solution

Kubernetes offers ingress as a solution to this complexity. An ingress is, essentially, a layer 7 load balancer.

A layer 7 load balancer operates at the application layer, the top layer of the OSI model, so it can make routing decisions based on the content of a request (for HTTP, for example, the host name and the URL path).

Ingress can provide load balancing, SSL termination and name-based virtual hosting.

It covers HTTP and HTTPS.

Any protocol other than HTTP and HTTPS has to be published differently, through a special ingress setup or via a NodePort or LoadBalancer service, but that is now a single place and a one-time configuration.
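With the nginx ingress controller used later in this article, for instance, that special setup for plain TCP is a ConfigMap mapping an exposed port to a namespace/service:port pair. The sketch below assumes the controller is started with `--tcp-services-configmap=$(POD_NAMESPACE)/tcp-services`, that port 6379 is also opened on the controller's own service, and that Redis sits behind a hypothetical service named redis in the default namespace.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcp-services  # referenced by the controller's --tcp-services-configmap flag
data:
  # "<exposed port>": "<namespace>/<service name>:<service port>"
  "6379": "default/redis:6379"
```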

Ingress setup

To set up ingress, we need two components:

- Ingress controller — component that manages ingress based on provided rules

- Ingress resources — Ingress HTTP rules

Ingress controller

There are a few options to choose from, among them nginx, GCE (Google Cloud) and Istio. At the time of writing, only two are officially supported by the Kubernetes project: nginx and GCE.

We are going to go with nginx as the ingress controller. For this we, of course, need a new deployment:

```yaml
# Deployments are served from apps/v1; extensions/v1beta1 was removed in Kubernetes 1.16
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx-ingress
  template:
    metadata:
      labels:
        name: nginx-ingress
    spec:
      # Service account with the RBAC permissions the controller needs (see below)
      serviceAccountName: nginx-ingress-serviceaccount
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller
          args:
            - /nginx-ingress-controller
            # Points the controller at the ConfigMap holding its nginx settings
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
```

Deploy a ConfigMap to make the ingress parameters easier to control:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configuration
```
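The ConfigMap above is empty, so the controller runs with its defaults. As a sketch of how parameters are set, the keys below are options documented for the kubernetes/ingress-nginx controller; check the ConfigMap reference for your controller version, and treat the values as examples only.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configuration
data:
  # All values must be strings
  proxy-body-size: "8m"        # maximum allowed size of a client request body
  ssl-redirect: "true"         # redirect HTTP to HTTPS for hosts that have TLS configured
  use-proxy-protocol: "false"  # set to "true" when the load balancer in front sends PROXY protocol
```

The use-proxy-protocol option pairs with the AWS proxy-protocol annotation shown earlier: if the ELB sends PROXY protocol headers, nginx has to expect them.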

Now, with the basic deployment in place and a ConfigMap to make the ingress parameters easier to control, we need to set up a service that exposes the ingress to the open internet (or some other, smaller network).

For this we set up either a NodePort service with a proxy or load balancer on top (the bare-metal/on-prem case) or a LoadBalancer service (the cloud case).

In both cases, we need both a layer 4 and a layer 7 load balancer:

- NodePort, possibly with a custom load balancer on top, as L4, and ingress as L7.

- LoadBalancer as L4 and ingress as L7.

A layer 4 load balancer, also referred to as a transport layer load balancer, directs traffic based on IP addresses and TCP or UDP ports, without inspecting the application data.

The NodePort service for the ingress, to illustrate the above:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress
spec:
  type: NodePort
  ports:
    - targetPort: 80
      port: 80
      protocol: TCP
      name: http
    - targetPort: 443
      port: 443
      protocol: TCP
      name: https
  selector:
    name: nginx-ingress
```

This NodePort service opens the same port on every node in the cluster (a node without an ingress pod forwards the traffic to one that has one), and the load balancer in front then distributes traffic between the nodes.
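For the cloud case, a minimal sketch of the same service simply switches the type to LoadBalancer, so the cloud provider provisions the L4 load balancer in front of the ingress pods. The name nginx-ingress-lb is hypothetical; the selector is the same name: nginx-ingress label that the controller deployment uses.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-lb  # hypothetical name
spec:
  type: LoadBalancer
  # Preserve the client source IP; only nodes running an ingress pod receive traffic
  externalTrafficPolicy: Local
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
  selector:
    name: nginx-ingress
```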

What separates an ingress controller from a regular proxy or load balancer is the additional underlying functionality that monitors the cluster for ingress resources and reconfigures nginx accordingly. For the ingress controller to be able to do this, it needs a service account with the right permissions:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
```

The service account above needs specific permissions at the cluster and namespace level for the ingress to operate correctly. For the particulars of the permission setup on an RBAC-enabled cluster, see the RBAC documentation in the official nginx ingress docs.
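To give an idea of what those permissions look like, here is a trimmed sketch modeled on what the nginx ingress controller typically requests. It is not the official role: the exact list differs between controller versions (newer releases also need, for example, endpointslices and ingressclasses), and the namespace-level Role used for leader election is left out. The role and binding names are hypothetical, and the binding assumes the service account lives in the default namespace.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole  # hypothetical name
rules:
  # Read the objects the controller turns into nginx configuration
  - apiGroups: [""]
    resources: ["configmaps", "endpoints", "nodes", "pods", "secrets", "services"]
    verbs: ["get", "list", "watch"]
  # Watch Ingress resources (old and new API groups)
  - apiGroups: ["extensions", "networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["get", "list", "watch"]
  # Publish the load balancer address in the Ingress status
  - apiGroups: ["extensions", "networking.k8s.io"]
    resources: ["ingresses/status"]
    verbs: ["update"]
  # Record events about configuration changes
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-binding  # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: default  # assumption: the namespace the controller runs in
```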

When we have all the permissions set up, we are ready to start working on our application ingress setup.

Ingress resources

Ingress resources let you fine-tune how incoming traffic is routed.

Let’s first take a simple API example. Assuming we have just one set of pods, deployed and exposed through a service named simple-api-service on port 8080, we can create simple-api-ingress.yaml:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: simple-api-ingress
spec:
  backend:
    serviceName: simple-api-service
    servicePort: 8080
```

Running kubectl create -f simple-api-ingress.yaml sets up an ingress that routes all incoming traffic to simple-api-service.

Rules

Rules configure how incoming requests are routed based on certain conditions, for example to different services within the cluster depending on the subdomain or the path.

Let us now get back to the initial example:

- frontend lives on www.example.com and serves everything not under /api

- API 1 is the search API at www.example.com/api/search

- API 2 is the general (everything else) API that lives on www.example.com/api

Since everything is on the same domain, we can handle it all through one rule:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: proper-api-ingress
spec:
  rules:
    - http:
        paths:
          - path: /api/search
            backend:
              serviceName: search-api-service
              servicePort: 8081
          - path: /api
            backend:
              serviceName: api-service
              servicePort: 8080
          - path: /
            backend:
              serviceName: frontend-service
              servicePort: 8082
```

There is also a default backend, used to serve default pages (such as 404s), which can be deployed separately. In this case we do not need it, since the frontend covers 404s.
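The Ingress manifests in this article use the extensions/v1beta1 API. That API (like networking.k8s.io/v1beta1) is no longer served from Kubernetes 1.22 onwards, so on a current cluster the same rules would be written against networking.k8s.io/v1, as sketched below. The main differences are the required pathType field and the nested service.name / service.port.number backend. The ingressClassName value nginx is an assumption: it has to match the IngressClass your controller registers, and it can be omitted if a default IngressClass is set.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: proper-api-ingress
spec:
  ingressClassName: nginx  # assumption: the IngressClass served by your controller
  rules:
    - http:
        paths:
          # With several matching paths, the longest match wins,
          # so /api/search takes precedence over /api and /
          - path: /api/search
            pathType: Prefix
            backend:
              service:
                name: search-api-service
                port:
                  number: 8081
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: api-service
                port:
                  number: 8080
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend-service
                port:
                  number: 8082
```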

You can read more at https://kubernetes.io/docs/concepts/services-networking/ingress/

Bonus — More rules, subdomains and routing

And what if we changed the example to:

- frontend lives on app.example.com

- API 1 is the search API at api.example.com/search

- API 2 is the general (everything else) API that lives on api.example.com

This is also possible, by adding a host to each rule in the rule definition:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: proper-api-ingress
spec:
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /search
            backend:
              serviceName: search-api-service
              servicePort: 8081
          - path: /
            backend:
              serviceName: api-service
              servicePort: 8080
    - host: app.example.com
      http:
        paths:
          - path: /
            backend:
              serviceName: frontend-service
              servicePort: 8082
```
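Since ingress can also provide SSL termination, the same resource can carry a tls section next to its rules. A minimal sketch, assuming a hypothetical Secret named example-tls of type kubernetes.io/tls that holds a certificate valid for both hosts (it could be created with `kubectl create secret tls example-tls --cert=<cert file> --key=<key file>`). Only the part of the spec that changes is shown; the host rules stay as above.

```yaml
spec:
  tls:
    - hosts:
        - api.example.com
        - app.example.com
      # Hypothetical Secret containing tls.crt and tls.key for the hosts above
      secretName: example-tls
  rules:
    # ... same host rules as above ...
```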

Note (out of scope): You may notice in the last illustration that there are multiple ingress pods, which implies that ingress can scale, and it can. The ingress controller can be scaled like any other deployment, and you can also have it autoscale based on internal or external metrics (an external metric, such as the number of requests handled, is probably the best choice).

Note 2 (out of scope): The ingress controller can, in some cases, be deployed as a DaemonSet to ensure scale and distribution across the nodes.

Wrap

This was a first pass through the structure and usage of k8s services and networking capabilities that we need in order to structure communication inside and outside of the cluster.

As always, I tried to provide a to-the-point, battle-tested guide to reality. What is written above should give you enough knowledge to deploy ingress, set up a basic set of rules to route traffic to your app, and give you context for further fine-tuning of your setup.

An important piece of advice: keep all the setups as code, in files in your repo. Infrastructure as code is an essential part of making your application reliable.
