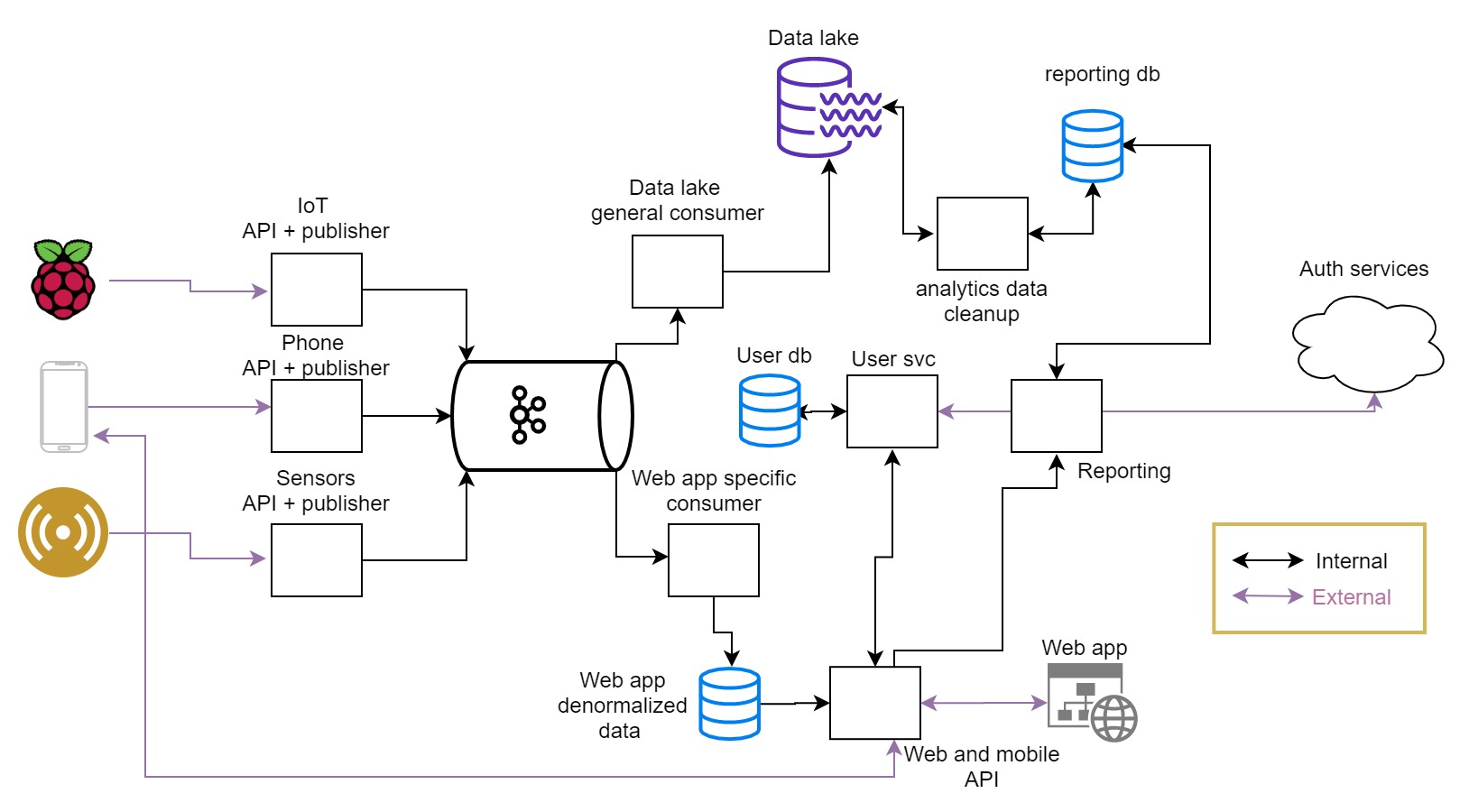

In the last article, I introduced the blueprinting case study, and we started building an understanding of our project. The next step is the blueprinting itself.

As a reminder, here is the architecture diagram.

For the first full blueprint, we will dive into the project's infrastructure.

The infrastructure view

As a next step in dissecting the system design and specification, we will dive deeper into the infrastructure.

To understand the infrastructure footprint of what we are about to build, we should first identify the standout components that the design implies.

At first glance, it looks like we have:

- Compute instances

- Ingress control components

- Databases

- Other cloud services

- CDN components

Let’s look at what we already know about our infrastructure focus and preferences. We are following Cloud-Native methodologies, with Kubernetes as the preferred infrastructure abstraction. Based on this, we can state the following:

- Compute instances will, in most cases, be hosted on Kubernetes, our container orchestration platform

- Ingress control components are split between Kubernetes and cloud

- Databases can be either full cloud solutions, or hybrid Kubernetes + cloud solutions

- Apart from everything else, our system still needs a CDN, preferably integrated with the other choices, depending on various factors

- The API caching solution awaits a final decision on where it belongs (at the API Gateway level or outside it)

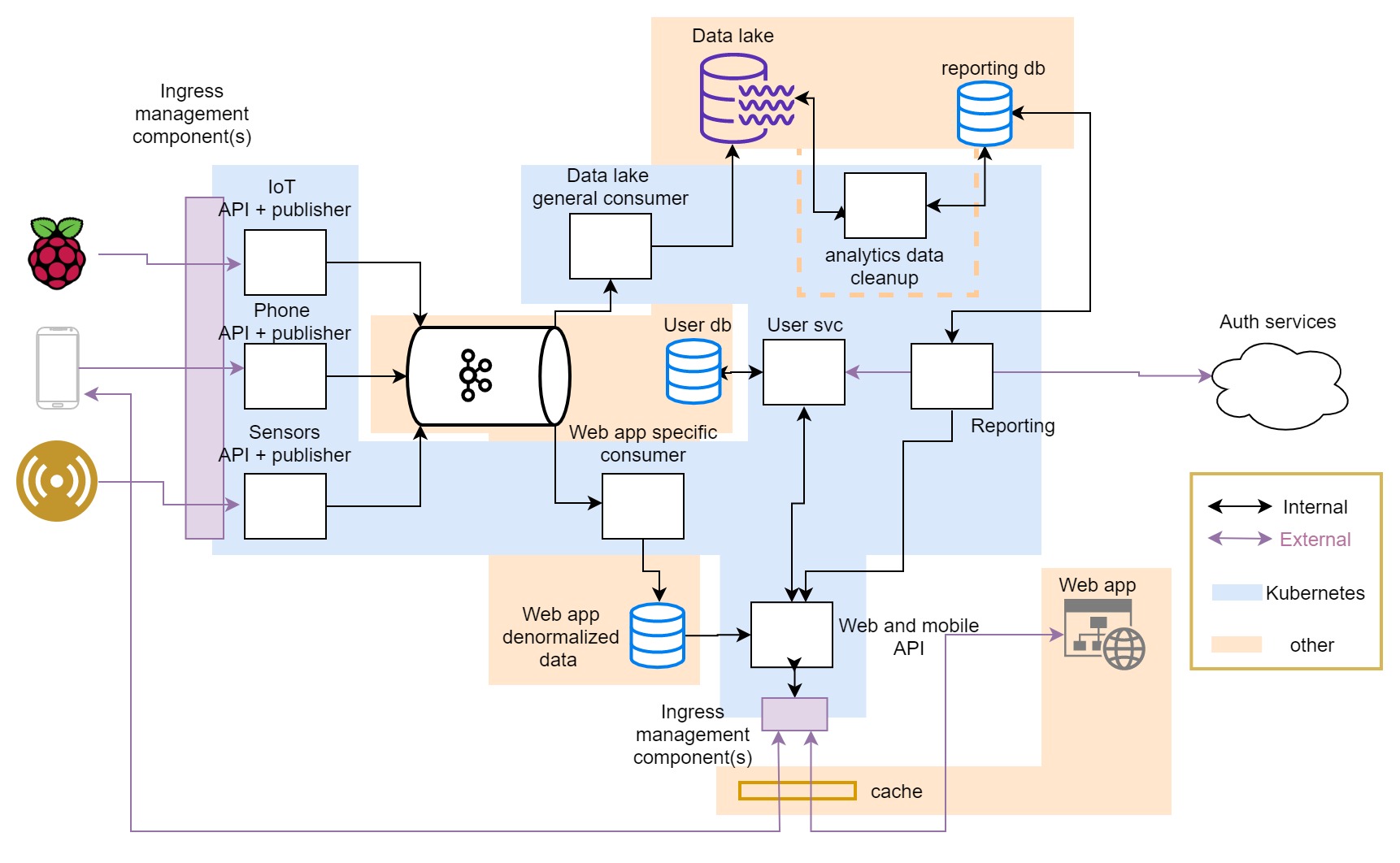

So, our basic infrastructure view divides the components into two major subsets, Kubernetes-hosted and other, with one of the components not yet fully analyzed at this level of detail.

In the picture - We have most of the components conceptually in place in the infrastructure. The dotted line shows that a particular service can be realized in multiple ways. The ingress components (purple boxes) need deeper analysis.

You can decide whether we are adding layers of understanding or peeling away layers of abstraction; ultimately, what matters is that we are analyzing our system in depth and providing the right perspective for the work we need to do to deliver it.

Next, we are going to explore the exact infrastructure solutions we will build to host our system and help it adhere to the design. We will do this by reviewing the design through a couple of essential perspectives to produce a proper infrastructure blueprint.

Through the infrastructure lens

Now we are ready to put the design through another filter and figure out the high-level setup we will go for; this time, it is a Kubernetes-centric review.

Looking at all the analysis we did before, we should be able to go one level deeper and figure out the following:

- Which separate Kubernetes deployments we need to make

- Which Kubernetes services need to be deployed

- Which deployments need an ingress controller and maybe a dedicated load balancer instance

- Which of the components deployed or run elsewhere need special setup

- Which components will be deployed to the cloud (or potentially “raw iron” setups)

Reading annotations and visualizations

To keep the same architecture diagram, traceability, and concepts across the solution while still adding depth, we will use the following visual notation:

- Color-coded zones

  - The blue zones are in k8s

  - The yellow-orange zones are the cloud solutions space

  - The purple zones are hybrid (Kubernetes component + external component) and will go through deeper analysis

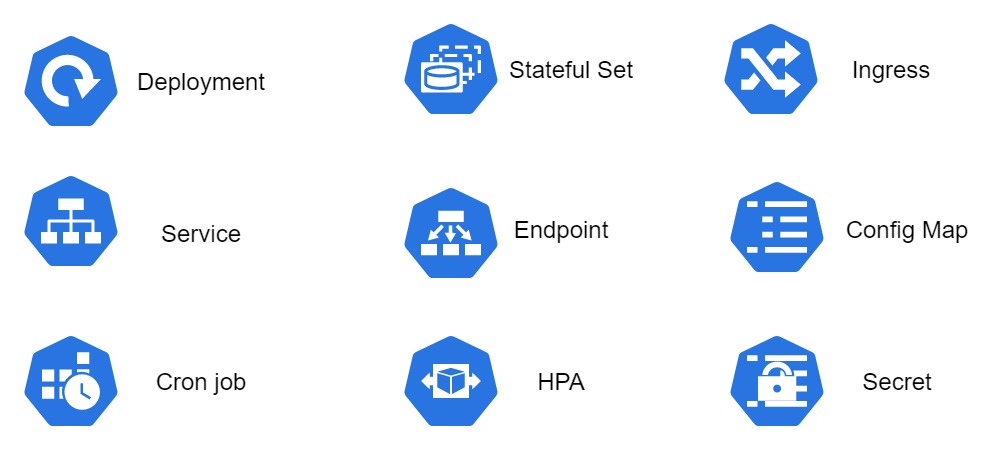

- Kubernetes component symbols

The following chart shows the component symbols we will use in this section. It does not cover the full Kubernetes specification; it focuses on our current case.

In the picture - The symbols for Kubernetes components used in this section

Understanding your system’s ingress control

Let’s dive deeper into those purple boxes.

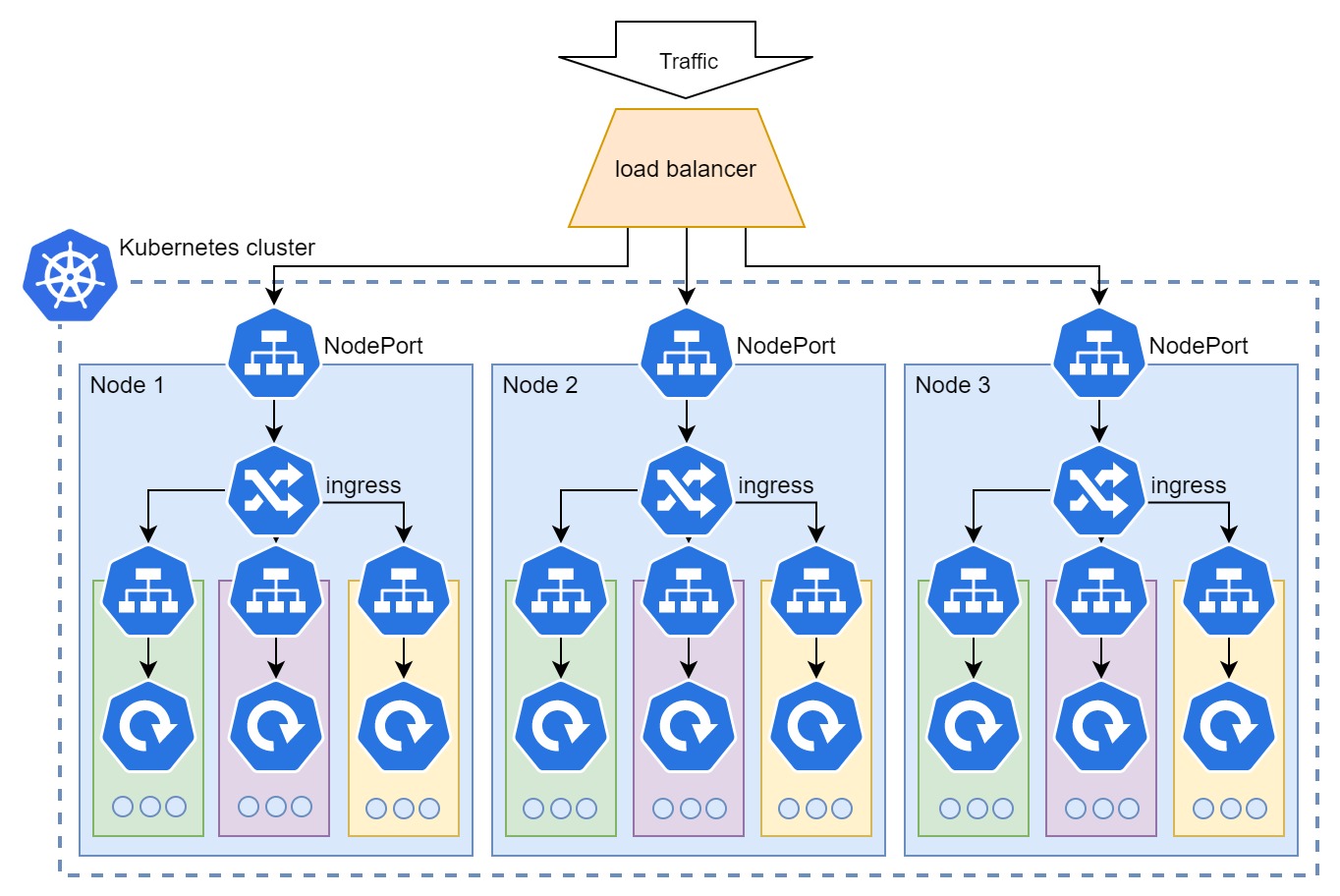

Full control and management of traffic entering your system is achieved through the tight coordination of three components:

- Load balancer

- NodePort service

- Ingress controller

This is a combination of Kubernetes and external solutions: the load balancer is deployed externally, while the NodePort service and the ingress controller are internal Kubernetes components.

Ingress on a managed service. Depending on how your Kubernetes cluster is administered, your team will need to manage this fully or partially. If you use one of the managed cloud Kubernetes offerings (GKE, EKS, AKS…), the load balancer is provisioned and its connections are maintained for you, so you mostly need to care about the ingress rules.

The Kubernetes Ingress setup works as follows:

- The Kubernetes cluster has a set of worker nodes that your deployments are distributed across

- The NodePort service makes your ingress controller accessible through the same defined port on every node

- The ingress controller adds a layer of routing and control inside the cluster (node) based on ingress rules

- The load balancer provides a single access point (external IP) to your app and balances the load across all the nodes, which is what lets the app scale out across the whole cluster
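To make the NodePort piece concrete, here is a minimal sketch, assuming we generate manifests as plain Python dictionaries and serialize them to JSON (which `kubectl apply -f` accepts). It builds a NodePort Service for an ingress controller; the Service name, the `app: ingress-controller` label, and the port numbers are hypothetical. It also checks each nodePort against 30000-32767, the default range unless the cluster sets `--service-node-port-range` differently.

```python
import json

# Default NodePort range; a cluster can change it with the
# kube-apiserver flag --service-node-port-range.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def ingress_controller_nodeport(name: str, http_node_port: int, https_node_port: int) -> dict:
    """Build a NodePort Service that exposes ingress controller pods on every node."""
    for port in (http_node_port, https_node_port):
        if port not in DEFAULT_NODE_PORT_RANGE:
            raise ValueError(f"nodePort {port} is outside the default 30000-32767 range")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            # Hypothetical label; it must match the ingress controller pods.
            "selector": {"app": "ingress-controller"},
            "ports": [
                {"name": "http", "port": 80, "targetPort": 80, "nodePort": http_node_port},
                {"name": "https", "port": 443, "targetPort": 443, "nodePort": https_node_port},
            ],
        },
    }


if __name__ == "__main__":
    # Pipe the output to `kubectl apply -f -` to create the Service.
    print(json.dumps(ingress_controller_nodeport("ingress-nodeport", 30080, 30443), indent=2))
```

Every node then accepts traffic on ports 30080 and 30443 and forwards it to the ingress controller pods, which gives the external load balancer a uniform target on all nodes.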

You can read more about this topic in my article Internal and external connectivity in Kubernetes space.

In the picture - How traffic flows in and is controlled by Kubernetes’ internal services.


Load balancer setup. As I already mentioned, the load balancer is a component external to Kubernetes that spreads traffic evenly across the Kubernetes worker nodes, depending on where your deployments are distributed. Although it is an external component, it is important to know that you can provision load balancers through Kubernetes itself, by creating a Service of type LoadBalancer. This works in cloud setups, where the cloud provider's integration creates the actual load balancer, and provider-specific behavior is configured through annotations on the Service manifest. This flexibility matters a lot when you need an isolated ingress setup for security or scale reasons. On-prem or in similar setups, this is a little harder, since there is no cloud provider to create the load balancer for you.

This setup requires the most work up front, when the cluster is bootstrapped. As you go deeper into delivering the app to Kubernetes, your only concerns from an incoming-traffic perspective should be deploying ingress rules and scaling properly.
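Under the same dictionary-manifest assumption, a Service of type LoadBalancer could be sketched like this. The name, label, and ports are hypothetical; the AWS annotation `service.beta.kubernetes.io/aws-load-balancer-type` is only one provider-specific example, and the available annotations depend entirely on your cloud provider.

```python
import json
from typing import Dict, Optional


def ingress_load_balancer(name: str, annotations: Optional[Dict[str, str]] = None) -> dict:
    """Build a LoadBalancer Service; the cloud integration provisions the actual load balancer."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        # Provider-specific settings go into annotations on the Service itself.
        "metadata": {"name": name, "annotations": dict(annotations or {})},
        "spec": {
            "type": "LoadBalancer",
            # Hypothetical label; it must match the ingress controller pods.
            "selector": {"app": "ingress-controller"},
            "ports": [
                {"name": "http", "port": 80, "targetPort": 80},
                {"name": "https", "port": 443, "targetPort": 443},
            ],
        },
    }


if __name__ == "__main__":
    # Example for AWS: ask for a Network Load Balancer.
    aws = {"service.beta.kubernetes.io/aws-load-balancer-type": "nlb"}
    print(json.dumps(ingress_load_balancer("ingress-lb", aws), indent=2))
```

By default, Kubernetes also allocates node ports for a LoadBalancer Service, so this single object covers both the NodePort and load balancer layers described earlier.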

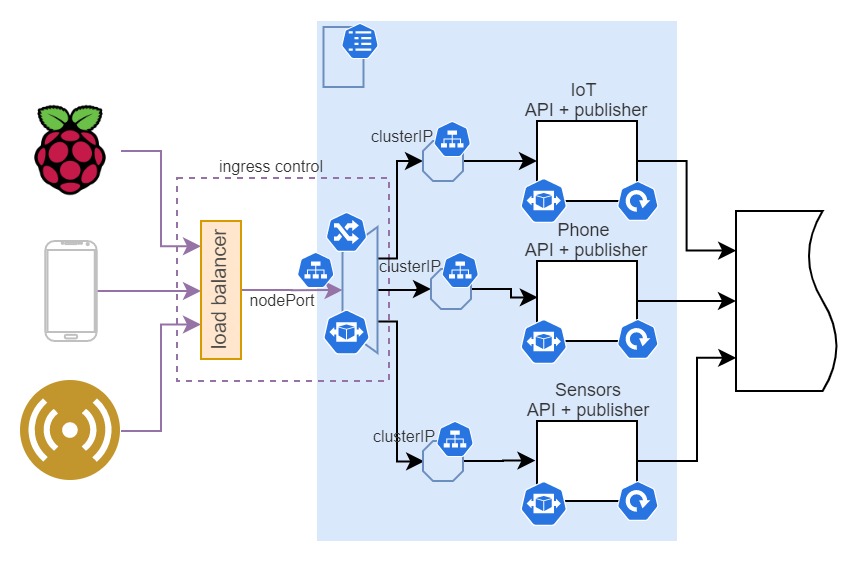

The sensor APIs

The sensor APIs are one of the two externally exposed subsets, which means they require ingress for access control and, most probably, routing.

I would assume these APIs have simple routing rules for incoming traffic, since they just keep receiving data from the sensors continuously. For example, when traffic reaches the ingress, the ingress routes it to a particular app (API) depending on which path is accessed:

- /api/phone - to phone API

- /api/general - to IoT API

- /api/light - to sensor API

This means we are deploying ingress rules that route traffic based on path. To make the routing possible, we add a Service of the default type, ClusterIP, to every deployment; it gives the deployment an in-cluster address and load balances across the replicas in its replica set.

Ingress routing in Kubernetes can be based on path, host, or a combination of both. Wildcard hosts are allowed starting from Kubernetes version 1.18.
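The path rules above could be expressed as a `networking.k8s.io/v1` Ingress, sketched here as a Python dictionary. The Service names `phone-api`, `iot-api`, and `sensor-api`, the Ingress name, and port 80 are hypothetical. `match_prefix` is only a local approximation of `pathType: Prefix` semantics (element-wise matching on `/`-separated segments, longest match wins); in a cluster, the ingress controller does the actual matching.

```python
import json
from typing import Dict, Optional

# Path-to-Service mapping from the example above; Service names are hypothetical.
ROUTES = {
    "/api/phone": "phone-api",
    "/api/general": "iot-api",
    "/api/light": "sensor-api",
}


def sensor_ingress(routes: Dict[str, str], service_port: int = 80) -> dict:
    """Build an Ingress that routes requests to ClusterIP Services by path prefix."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": svc, "port": {"number": service_port}}},
        }
        for path, svc in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "sensor-apis"},
        "spec": {"rules": [{"http": {"paths": paths}}]},
    }


def match_prefix(request_path: str, routes: Dict[str, str]) -> Optional[str]:
    """Approximate Prefix matching: compare path segments, prefer the longest match."""
    request = [part for part in request_path.split("/") if part]
    best, best_len = None, -1
    for path, svc in routes.items():
        segments = [part for part in path.split("/") if part]
        if request[: len(segments)] == segments and len(segments) > best_len:
            best, best_len = svc, len(segments)
    return best


if __name__ == "__main__":
    print(json.dumps(sensor_ingress(ROUTES), indent=2))
    print(match_prefix("/api/phone/calls", ROUTES))
```

Note that segment-wise matching means `/api/phones` does not match the `/api/phone` rule, which mirrors how Kubernetes defines prefix matching.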

The API microservice itself is contained in a deployment, which encompasses all the container orchestration components this app needs to run. One optional feature that deserves emphasis is horizontal pod autoscaling (HPA). HPA lets the deployment scale by adjusting the number of pods based on observed resource utilization, such as CPU. For this part of our system, HPA is a must because of the variable nature of external traffic.

A Kubernetes pod is the basic unit of scaling. It is a logical unit that represents a single instance of a containerized application, or multiple tightly coupled containers deployed to Kubernetes together. A basic concept of the cloud-native approach to software architecture is that services are stateless, so they can be deployed as replica sets: multiple identical copies that traffic can be routed to, each responding the same way to the same request.

Finally, what we can be certain of at this moment is that any deployed service will need some level of configurability, so we assume a need to deploy config maps. This component is less important for the architecture itself, but we will touch on the use of some of these data objects throughout this article and beyond, so it is better to introduce them now.

A ConfigMap is a Kubernetes API object used to store non-confidential data as key-value pairs. Once deployed, it can be consumed by pods as environment variables, as command-line arguments, or as configuration files in a volume.
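The scaling decision of the HPA follows the formula in the Kubernetes documentation: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below implements only that core formula, the default 0.1 tolerance, and min/max clamping; the real controller also applies stabilization windows and handles missing metrics and not-yet-ready pods. The min/max defaults here are hypothetical.

```python
import math

# Default HPA tolerance; configurable with the kube-controller-manager flag
# --horizontal-pod-autoscaler-tolerance.
TOLERANCE = 0.1


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Core HPA formula: ceil(currentReplicas * currentMetric / targetMetric), clamped."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= TOLERANCE:
        # Close enough to the target: the controller skips scaling.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
```

For example, three pods averaging 90% CPU against a 60% target yield ceil(3 * 1.5) = 5 pods, and the same formula scales back down when traffic drops.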
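On the application side, consuming a ConfigMap as environment variables (for example, via `envFrom` with a `configMapRef` in the container spec) means the service only has to read its environment. A minimal sketch, where the ConfigMap name `sensor-api-config`, the keys `KAFKA_BOOTSTRAP`, `SENSOR_TOPIC`, and `MAX_BATCH_SIZE`, and the defaults are all hypothetical:

```python
import os
from dataclasses import dataclass
from typing import Mapping


def sensor_config_map() -> dict:
    """Hypothetical ConfigMap for a sensor API; all values must be strings."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "sensor-api-config"},
        "data": {"SENSOR_TOPIC": "sensor-readings", "MAX_BATCH_SIZE": "500"},
    }


@dataclass(frozen=True)
class SensorApiConfig:
    kafka_bootstrap: str
    topic: str
    max_batch_size: int


def load_config(env: Mapping[str, str] = os.environ) -> SensorApiConfig:
    """Read settings injected as environment variables, falling back to defaults."""
    return SensorApiConfig(
        kafka_bootstrap=env.get("KAFKA_BOOTSTRAP", "kafka:9092"),
        topic=env.get("SENSOR_TOPIC", "sensor-readings"),
        # ConfigMap values arrive as strings, so convert explicitly.
        max_batch_size=int(env.get("MAX_BATCH_SIZE", "500")),
    )


if __name__ == "__main__":
    print(load_config())
```

Keeping the code unaware of where the values come from lets the same image run in every environment with a different ConfigMap.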

In the picture - Sensor APIs design diagram with some fundamental infrastructure setup notation


As you can see, due to the amount and type of traffic this part will receive, I would recommend putting a strong emphasis on its elasticity. The system has to be equipped to scale up or down based on the traffic patterns it experiences. For this reason, all three microservice deployments and the ingress controller have HPA attached to them.

Scalability and Elasticity - Scalability is the ability of a system to scale up or down on demand by adding or removing resources. Elasticity is the ability to allocate the appropriate amount of resources at any given time based on some parameters (hardware utilization, number of visitors, etc.). So a scalable system can become elastic once it gets autoscaling capability.

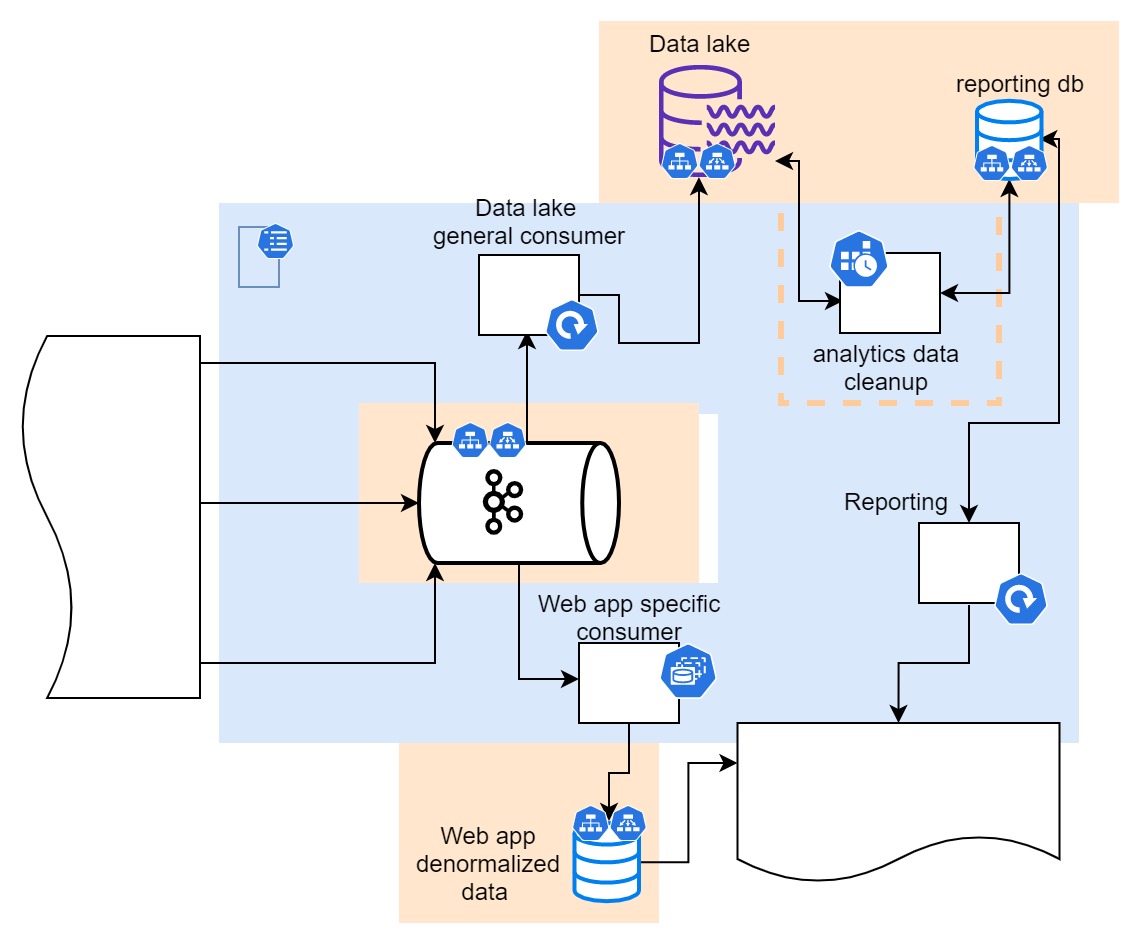

Data aggregation and processing

The data aggregation and processing part of the system is centered around external components: data storage. Everything we deploy and run in Kubernetes in this area is there to move data from one database to the next.

For this reason, one new notion we are introducing is the connection to external components. To standardize setups and configurations, we want to avoid our software ever having to know the exact IP, URI, or any other name of those components. Depending on whether we prefer partial or full abstraction, we can use one of two approaches:

- Dynamic configuration of addresses: keep them outside of the service (in a DB, config map…) and have the service read them.

- Full abstraction: use a Kubernetes service with manually defined endpoints, where the service name never changes but the endpoint points to the external address being proxied.

Endpoints are access/connection points in Kubernetes. They go hand in hand with services and are, in most cases, generated automatically in the background. When a service has no internal component (selector) to reference, no endpoint is created automatically; we can use this to create an endpoint that points to the external service we want to reference. With this setup in place, applications deployed inside the cluster can access the external component through that single, stable endpoint.
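A minimal sketch of that pattern, again as Python dictionaries: a Service without a selector plus a hand-written Endpoints object with the same name. The name `user-db`, the IP `10.0.12.34`, and port 5432 are hypothetical. Applications would then connect to `user-db` (or `user-db.<namespace>.svc.cluster.local`). Endpoints entries must be IP addresses; when the external component is only reachable through a DNS name, a Service of type ExternalName is the usual alternative, and newer clusters favor EndpointSlice objects for the same purpose.

```python
import ipaddress
import json
from typing import List


def external_service(name: str, ip: str, port: int) -> List[dict]:
    """Selector-less Service plus a hand-written Endpoints object with the same name."""
    # Endpoints must contain IP addresses; ip_address() raises ValueError otherwise.
    ipaddress.ip_address(ip)
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        # No "selector": Kubernetes will not manage endpoints for this Service.
        "spec": {"ports": [{"protocol": "TCP", "port": port, "targetPort": port}]},
    }
    endpoints = {
        "apiVersion": "v1",
        "kind": "Endpoints",
        # The name must match the Service for the two to be linked.
        "metadata": {"name": name},
        "subsets": [{"addresses": [{"ip": ip}], "ports": [{"port": port}]}],
    }
    return [service, endpoints]


if __name__ == "__main__":
    for obj in external_service("user-db", "10.0.12.34", 5432):
        print(json.dumps(obj, indent=2))
```

If the database ever moves, only the Endpoints object changes; every consumer keeps using the same Service name.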

In the picture - Part of the system in charge of storing and preparing the data


In the previous section, we approached the problem of an influx of high-volume data arriving at variable frequency. At the forefront of persisting that data in our system is Kafka, the distributed streaming platform that can accept large volumes of data as streams of events and partition those streams for easier consumption downstream. The sensor APIs produce events on the streaming platform, to be consumed and processed by stream processors.

In this design, there are two setups for stream processors (also referred to as consumers) with regard to Kubernetes.

The basic consumer is the one that feeds data into the data lake. Its processing requirements are quite simple (we could even say non-existent), but it has to process and store high volumes of data (everything that comes in). In this case, we can aim for a scalable consumer setup where Kafka partitions the data across as many consumers as are deployed, making it possible to scale data throughput to whatever level is needed. Since each partition is consumed by at most one consumer in a consumer group, the number of partitions sets the upper limit on this parallelism. For this case, we choose a regular (stateless) Kubernetes deployment.

A more specific stream processor is the one consuming the data we need for the frontend API. This stream processor subscribes to specific parts of the stream (topics) and does specific processing of the data. For this reason, data partitions are handled in exact order, and both the number of consumers and their deployment are fixed, so we use a StatefulSet to keep the exact number and order of consumer pods.

A StatefulSet is a Kubernetes workload designed for applications whose internal state is essential to their functioning. Like a deployment, it manages pods based on an identical container specification, but unlike a regular deployment, it guarantees the identity and ordering of its pods. The purpose is to ensure that a stateful app keeps functioning properly after any disturbance, since any pod in the set can be recreated with exactly the same identity and in the right place.
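To see why partitions cap consumer scaling, here is a simplified round-robin assignment in the spirit of Kafka's consumer-group assignors (the real range, round-robin, and sticky assignors differ in detail). Each partition gets exactly one owner, so consumers beyond the partition count sit idle. The pod names are hypothetical.

```python
from typing import Dict, List


def assign_round_robin(partitions: int, consumers: List[str]) -> Dict[str, List[int]]:
    """Spread partition ids over group members; each partition gets exactly one owner."""
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    for partition in range(partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


def idle_consumers(assignment: Dict[str, List[int]]) -> List[str]:
    """Consumers without partitions receive no data at all."""
    return [c for c, owned in assignment.items() if not owned]


if __name__ == "__main__":
    pods = [f"lake-writer-{i}" for i in range(8)]
    plan = assign_round_robin(6, pods)
    print(plan)
    print(idle_consumers(plan))
```

In practice, this means sizing the topic's partition count for the highest number of consumer replicas the HPA might reach.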
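One way to use that stable identity, sketched under assumptions: StatefulSet pods are named `<statefulset-name>-<ordinal>`, and each pod's hostname equals its pod name, so a consumer can derive its ordinal at startup and claim a fixed set of partitions (for example, by passing them to the Kafka client's manual-assignment API). The StatefulSet name `frontend-consumer` and the modulo mapping are hypothetical; inside a pod, you would pass `socket.gethostname()` to `ordinal_from_hostname`.

```python
import re
from typing import List


def pod_names(statefulset: str, replicas: int) -> List[str]:
    """StatefulSet pods are named <name>-<ordinal> and are created in order 0..N-1."""
    return [f"{statefulset}-{ordinal}" for ordinal in range(replicas)]


def ordinal_from_hostname(hostname: str) -> int:
    """A StatefulSet pod's hostname equals its pod name, so the ordinal is the suffix."""
    match = re.fullmatch(r".+-(\d+)", hostname)
    if match is None:
        raise ValueError(f"{hostname!r} does not look like a StatefulSet pod name")
    return int(match.group(1))


def partitions_for(ordinal: int, replicas: int, partitions: int) -> List[int]:
    """Hypothetical fixed mapping: partition p belongs to the pod with ordinal p % replicas."""
    return [p for p in range(partitions) if p % replicas == ordinal]


if __name__ == "__main__":
    for name in pod_names("frontend-consumer", 3):
        ordinal = ordinal_from_hostname(name)
        print(name, partitions_for(ordinal, replicas=3, partitions=6))
```

Because a recreated pod keeps its name, it also keeps its partitions, which preserves the processing order the frontend API depends on.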

As data lands in the data lake and frontend API database, the speed at which data is arriving starts to have less influence on what the system is doing afterward.

The database with denormalized data for web and mobile applications is ready to be consumed by downstream systems, but raw data from the data lake still has to be analyzed and analytics reports need to be created.

The specification tells us that this is a periodic analysis of the data available in the data lake, with the results written to yet another database that will back the reporting API. For this purpose, we will go with a Kubernetes CronJob running at configurable intervals.

We finish this part by looking at reporting data availability, which by design is provided through an interface exposed by the reporting microservice. Nothing special here: just a regular Kubernetes deployment, with no need for outward-facing networking components, since this interface will be used by services deployed in the internal network.
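A sketch of such a CronJob as a Python dictionary, where the `schedule` argument is the configurable interval. The job name, container name, and image are hypothetical. `concurrencyPolicy: Forbid` is one reasonable choice for a batch analysis, since it prevents a new run from starting while the previous one is still going; Job pods must use `restartPolicy` `OnFailure` or `Never`.

```python
import json

# Kubernetes CronJob also accepts these macros instead of a 5-field expression.
MACROS = {"@yearly", "@annually", "@monthly", "@weekly", "@daily", "@midnight", "@hourly"}


def analytics_cronjob(schedule: str, image: str) -> dict:
    """CronJob that runs the (hypothetical) analytics batch job on a configurable schedule."""
    if schedule not in MACROS and len(schedule.split()) != 5:
        raise ValueError(f"unsupported schedule {schedule!r}")
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": "analytics-report"},
        "spec": {
            "schedule": schedule,
            # Do not start a new run while the previous one is still going.
            "concurrencyPolicy": "Forbid",
            "jobTemplate": {
                "spec": {
                    "template": {
                        "spec": {
                            # Job pods must use OnFailure or Never.
                            "restartPolicy": "OnFailure",
                            "containers": [{"name": "analytics", "image": image}],
                        }
                    }
                }
            },
        },
    }


if __name__ == "__main__":
    job = analytics_cronjob("0 */6 * * *", "registry.example.com/analytics:1.0")
    print(json.dumps(job, indent=2))
```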

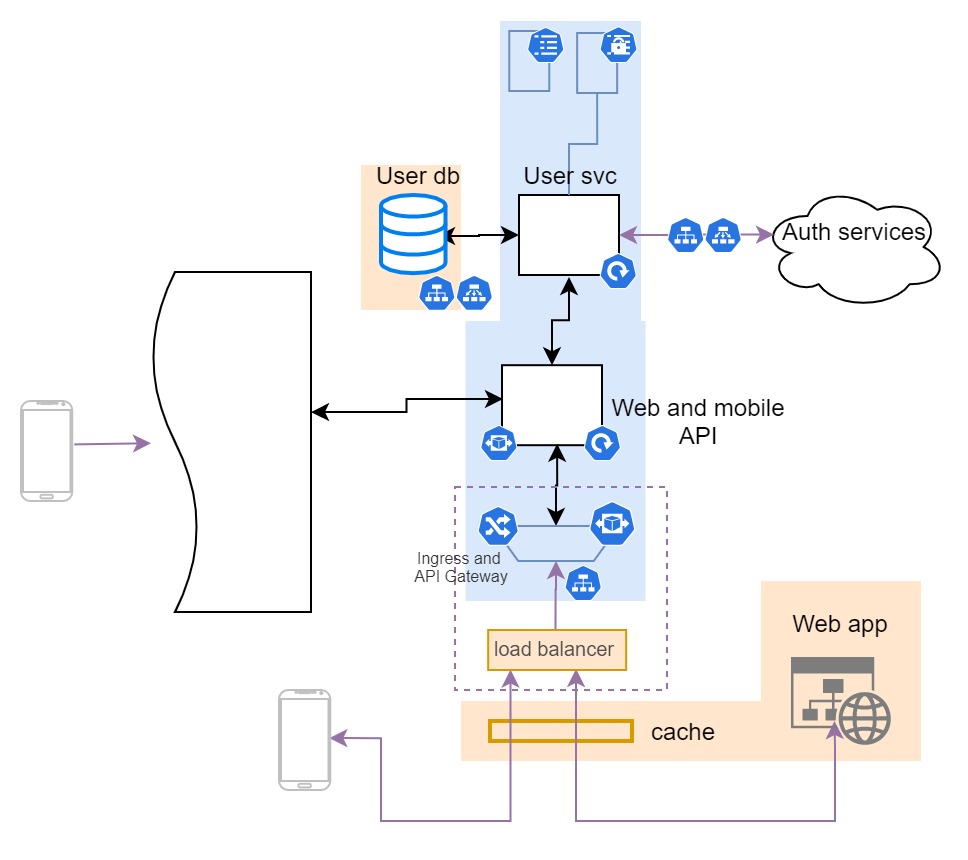

Providing the data

The data provider section of the system is oriented towards serving data to web and mobile applications, so the data flowing through its connections is expected to be mostly UI content. In most cases, it will be numerical data for the analytics dashboards, but also user data, preferences, and authentication.

Design-wise, let’s note the new considerations before we go through the full picture:

- The ingress component of the system now also needs API gateway functionality, to comply with the requirement for control and monitoring from the API consumer's perspective

- The system connects to an external authentication service

- There is a database used for user data

- We need some secrets (API key(s)) deployed and rotated, so we’re going to assume that they exist in the form of Kubernetes secrets

Kubernetes secrets are basic data objects similar to ConfigMaps, but intended for confidential data. Their content is only base64-encoded, not encrypted: all values must be base64-encoded when deployed, and anyone who can read the Secret object can decode them. Any deployment can reference a deployed secret; in that case, Kubernetes decodes the base64 and the app receives the decoded value of the secret.

This part of the system connects to two external components (in a broader sense this time): the user database and the authentication service. The latter is a SaaS rather than a component we run, hence the broader sense. For these, we should deploy custom endpoints with Kubernetes services on top of them, which makes the microservices agnostic to external addresses and uses Kubernetes features in the best way possible.

For configuration and sensitive data management, we would deploy two kinds of data objects: secrets and config maps. Deploying each is a different story, and I plan to cover both topics soon in other articles.

The last part of the setup is the content that lives in a CDN (content delivery network) or a similar set of components. There are two parts to this content: the web app frontend (what browsers download) and API caching. No matter how it is deployed, we have to be mindful of both. It usually comes down to caching rules and compressed build artifacts (JS, CSS, images…).

Where to cache. There are multiple ways to deliver performant API responses: an in-app persistence layer (graphQL, Redis, Memcached), API gateway caching, and edge caching, to name a few. These methods also work well together in the same app, since they mostly solve different problems. Edge caching is especially important to me because it brings the cache closest to the user, which gives the biggest performance gain, and (if configured correctly) it also provides strong security and DDoS protection.
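The following sketch shows why base64 offers no protection by itself: building the `data` section and reading it back are both one-liners. The Secret name `frontend-api-keys` and the key `AUTH_API_KEY` are hypothetical. Kubernetes also accepts plain values under `stringData` and encodes them for you.

```python
import base64
from typing import Dict


def secret_manifest(name: str, values: Dict[str, str]) -> dict:
    """Build an Opaque Secret; values under "data" must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


def decode_secret(secret: dict) -> Dict[str, str]:
    """Anyone who can read the object can do this: base64 is encoding, not encryption."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in secret["data"].items()}


if __name__ == "__main__":
    s = secret_manifest("frontend-api-keys", {"AUTH_API_KEY": "not-a-real-key"})
    print(s["data"])
    print(decode_secret(s))
```

Real protection therefore comes from access control on Secret objects and from enabling encryption at rest, not from the encoding.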
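Caching rules often boil down to choosing a `Cache-Control` header per type of content. The sketch below assumes a hypothetical split: content-hashed build artifacts are cached long-term, a hypothetical `/api/public/` prefix holds shared aggregate data that the edge may cache (`s-maxage` applies only to shared caches such as a CDN), user-specific API responses never enter shared caches, and entry points like `index.html` are always revalidated. The TTL values are illustrative, not recommendations.

```python
import re

# Build artifacts with a content hash in the file name (e.g. app.3f9a1c2b.js)
# never change, so they can be cached for a long time.
HASHED_ASSET = re.compile(r"\.[0-9a-f]{8,}\.(?:js|css|png|jpg|svg|woff2)$")


def cache_control(path: str) -> str:
    """Pick a Cache-Control header value for a request path (rules are hypothetical)."""
    if HASHED_ASSET.search(path):
        return "public, max-age=31536000, immutable"
    if path.startswith("/api/public/"):
        # Shared aggregate data: short browser TTL, longer TTL at the edge.
        return "public, max-age=60, s-maxage=300"
    if path.startswith("/api/"):
        # User-specific data and auth responses must not land in shared caches.
        return "private, no-store"
    # index.html and similar entry points: always revalidate so new builds show up.
    return "no-cache"


if __name__ == "__main__":
    for p in ("/static/app.3f9a1c2b.js", "/api/public/stats", "/api/users/me", "/index.html"):
        print(p, "->", cache_control(p))
```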

Some notes and further setup

Throughout this article, I have demonstrated one approach to designing the infrastructure part of your project. Using a real example, I wanted to provide ideas and guidance for your future and existing projects, and this gives us lots of new things to explore.

There are still many aspects I have not touched on; in the next article, I will dive into the networking setup and security in general (from a technology-agnostic perspective).