Starting a project the right way requires a good foundation. It needs the material for that engineering meeting by the toolbox every morning.

Coming off the discussion on being Native to the Cloud and my insights into the two scales of DevOps, in this and the following articles I want to commit to working through everything by way of a case study, in order to bring the most value to you.

Blueprinting your project

By definition, a blueprint is a design plan or other technical drawing.

A blueprint focuses on providing direct, well-defined, best-possible solutions to problems. These are primarily meant to be building blocks for a plan - the technical layers of the development project setup.

In our case these will be blueprints for Kubernetes-centered projects, so every solution will be aimed at how to tackle a specific problem or piece of process planning in that context.

So, building and delivering software defined by the system specification and architecture is, obviously, our essential work. But what are the essential things you need to choose and figure out to make this a reality? What are the things that come to mind first?

We need:

- Choice of software engineering technology and methodology (programming language, database, and so on).

- Choice of infrastructure - we already know that we chose the Kubernetes ecosystem; we will decide on everything else along the way.

- Everything else (:troll_face:)

Everything else is actually what defines how quickly you move forward, and how long you can keep going forward. It is how you enhance your design, how you keep evolving it, how you support any future changes, how you deliver your business value, how you monitor and react to problems (maintain business value), etc. It is everything, as in - it is your approach to solving (and scaling) the two scales of DevOps.

Decision making - in a real-life, emergent design setting, and/or when taking any of the evolutionary approaches, the essential skill that will give you an edge is the ability to make a decision at the last responsible moment. That is the way to get the most out of your design and decision-making process, because by then you have gathered the maximum amount of data and experience.

The core of delivering any software system is how you design, plan, and execute; how you map architecture to your process and infrastructure; and how you secure it and make sure it is resilient and adheres to the necessary performance standards. A blueprint needs to teach you where to place the essential focus points so you can manage the solutions you need.

All of this is embedded in your design, infrastructure, and tooling and supported by the technologies you decided to build software in. Technologies might change over time, even the architecture, but if the initial plan, the infrastructure in place, the delivery process, and the quality focus are defined well, even these kinds of transitions will be easy and smooth.

The blueprints for every aspect of your project should give you a good way forward and an idea of how not only to set up a project, but also to evolve it over time, or at least how to approach its evolution. They give you a setup and a way forward for driving your project in an emergent design way.

This post will cover:

- The architecture of the system we want to work on for this case study.

- Specification and architecture checklist to confirm that we have received all the information we need to move forward.

- High-level architecture analysis from the non-functional requirements (NFR) perspective - an intro for the coming blueprints.

- Initial list of blueprints this series will provide.

So, let’s start.

The case study

We will be diving into a greenfield microservice project. All of the concepts are applicable to so-called brownfield, too, and at some point I might try to cover the differences from a purely experiential side.

In the same way as I would never promise the ultimate solution (for me the only ultimate solution is the wheel), I will never make the mistake of starting a debate on which task is more complex. There are many dimensions complexity may or may not depend on, but for the sake of having less argument about these topics - we go with something that can be a medium-complexity greenfield project with enough structure for a good narrative :).

For this case study, I will be looking at a solution that is part of an Internet of Things ecosystem with thousands of sensors around the world and front-end applications connected to it for management and monitoring. The solution is the system that crunches and stores data, provides a number of APIs to manage sensor data, and delivers various services to apps (web and mobile) and integrated systems.

The specification

Here I will provide you with excerpts from the detailed system specification. These excerpts are the key points of the spec that influence our work. This is not ideal in terms of thinking space, but the actual thought experiment has to be streamlined so that we do not lose focus.

The sensor APIs

A set of APIs needs to be provided to receive sensor data from three types of devices:

- Small on-site sensor arrays connected to Raspberry Pi devices streaming normalized data they receive from sensors in the buildings

- Low bandwidth internet-connected sensors in machines

- Mobile phone applications.

Scaling - Different types of devices have different scaling and security demands, which are listed in a separate part of the specification. It is important for us to know that almost every component in this system has different scale and throughput demands.

The system needs to be capable of handling a high influx of data in certain periods of time based on both daily patterns of usage and different events throughout the year.

Data aggregation and processing

The system needs to provide a way to store large amounts of data very quickly and have the capacity to do fast processing of the data flowing in.

The collection system has to be capable of processing and storing data for different purposes. As part of the MVP data has to be processed and stored for two purposes:

- A data lake to accommodate the internal business analytics team’s needs and any future data usage.

- A relational database for serving data to web and mobile applications.

Additional data storage has to be provided for user profile data. This data is to be used for user authentication and experience purposes and has to be treated with special attention to the security of data.

Providing the data

In addition to the APIs receiving data from different kinds of devices, a set of frontend APIs should be provided to supply web and mobile applications with the necessary data.

These APIs should be fast and highly available since they will be used in applications directly facing the consumer.

In order to avoid unauthorized usage of the APIs, the ability to control access and usage should be provided. For this purpose, the system should be able to make a distinction between different applications consuming the data and act according to specific app requirements.

The Diagram

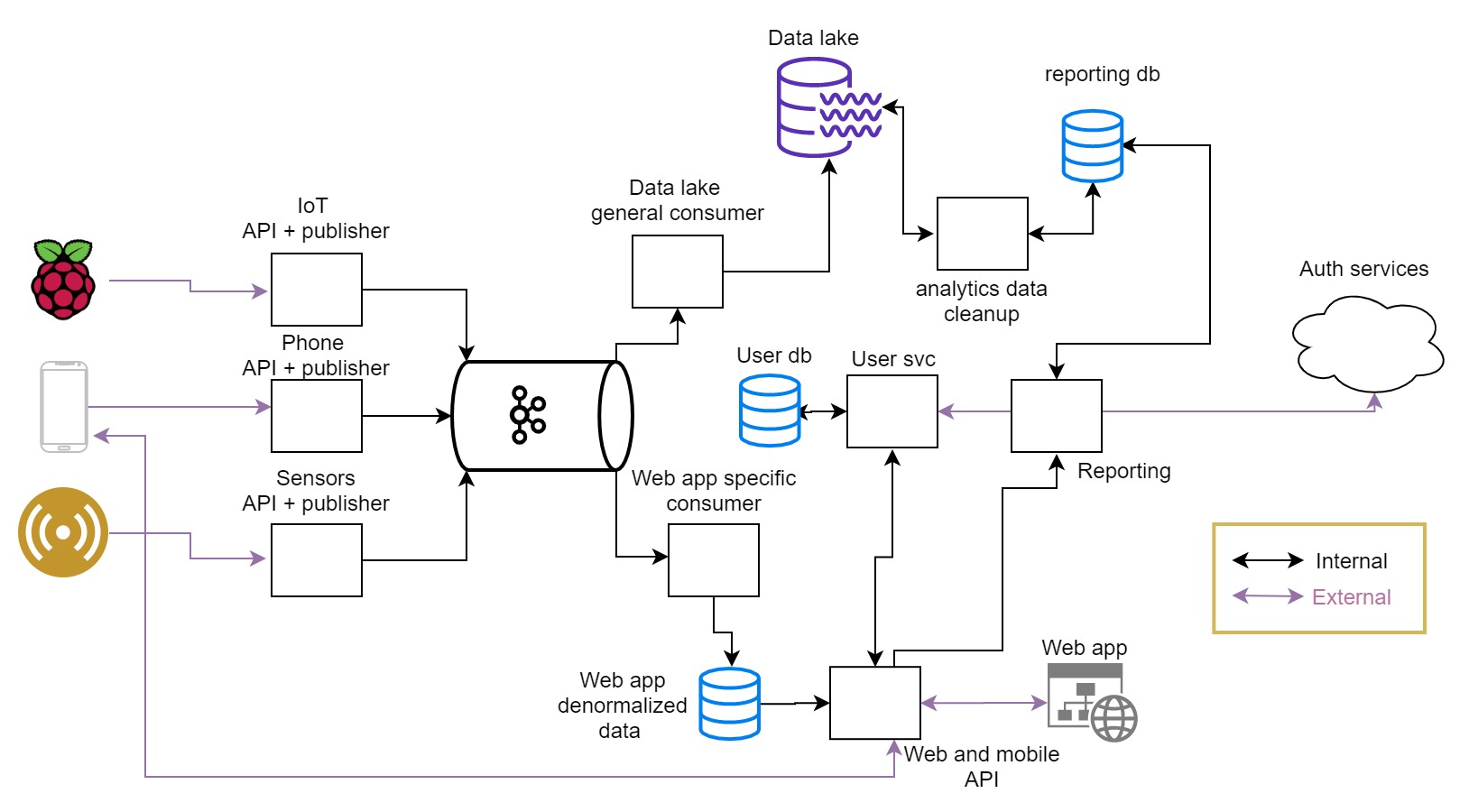

The software architecture diagram

Product and technical specification - These two information packages follow one from the other. The product specification is written as a new feature request that is probably not (or at least not fully) technically understood. Once the product specification is ready, requirements gathering of some sort usually commences. At this point the tech lead or architect (or both) join the party to figure out the base of the technical specification through a series of (usually long) interviews and dialogues with stakeholders. The information provided in this subsection is part of the further feature specification.

Looking at the specification, you can’t see much until the architect has gone through the requirements with business counterparts and provided the team with an enhanced specification. It is enhanced by adding technical components to the product specification, along with multiple diagrams describing data flows, entity relations, system structure, etc.

Apart from the specification detail already stated before, the part that is important for our further involvement in system development is one of the architecture diagrams that contains information about the general architecture of the system. Giving no preference to a particular diagram style (going fully free form, if you want :D), the following diagram represents our system.

In the Picture - Architecture diagram showing APIs that receive data from 3 types of devices, publishing data to streaming system to be consumed and denormalized in a few ways and served (back) to different applications

This diagram and the written specification are the base for the further creation of the system’s architecture, delivery pipeline, process plant, and every imaginable technical component that is going to help develop, deliver and run application code.

For the above reason, we have to make sure the base information we are working with is sound.

Making sure the architecture diagram is right

Whatever diagram style or flavor/version you end up with, for it to be fully applicable it has to have a few basic characteristics that guarantee you have a clear picture of the system’s needs (or of which parts you will eventually need more details about).

When receiving an architecture diagram, my advice would be to always scrutinize it using the following set of questions:

- Are connections between components clear from the networking perspective?

- Is the nature of all the connections clear (like internal network, secure communication, outside traffic, and so on)?

- Is it clear which components are developed and delivered by us and which are platforms or apps (XaaS) that the system is connecting to?

- If the design consists of multiple diagrams (which generally it should), can you trace all the connections and flows between diagrams?

- Is the level of detail consistent throughout the diagram, and is a legend present to help understand visual cues?

- If there are additional security requirements, are they noted in the diagram or in the specification?

Level of detail. It is more important for the diagram to have a consistent level of detail than to have all the details. The magic of a consistent level of detail is that it always lets you know what the diagram can and cannot tell you, so you immediately see where you will eventually need more detail. The problem with inconsistent levels of detail is that you can easily overlook places where data is missing and take the diagram as a full requirement, eventually ending up with a failure to deliver, a need for rework, or some other kind of failure.
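Some of the questions above can be checked mechanically if the diagram is also kept as data. The following is only a minimal sketch in Python under that assumption: the `Component` and `Connection` classes, their fields, and the example entries are hypothetical and do not come from the specification. It flags components with unclear ownership, connections whose nature is not stated, and endpoints that cannot be traced to any known component.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Component:
    name: str
    owner: Optional[str]  # "us" or "external" (XaaS); None means the diagram does not say


@dataclass(frozen=True)
class Connection:
    source: str
    target: str
    nature: Optional[str]  # e.g. "internal", "public-tls"; None means unspecified


def review_diagram(components, connections):
    """Return a list of human-readable gaps found in the diagram model."""
    gaps = []
    known = {c.name for c in components}
    for c in components:
        if c.owner is None:
            gaps.append(f"ownership unclear: {c.name}")
    for conn in connections:
        for end in (conn.source, conn.target):
            if end not in known:
                gaps.append(f"untraceable endpoint: {end}")
        if conn.nature is None:
            gaps.append(f"connection nature unclear: {conn.source} -> {conn.target}")
    return gaps


# Hypothetical, deliberately incomplete diagram model.
components = [
    Component("sensor-api", owner="us"),
    Component("kafka", owner=None),
]
connections = [
    Connection("sensor-api", "kafka", nature="internal"),
    Connection("kafka", "data-lake", nature=None),
]

for gap in review_diagram(components, connections):
    print(gap)
```

An empty result does not prove the diagram is right; it only shows that the same level of detail is present everywhere the model looks.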

Reading the spec

Now that we have made sure we received a good specification and architecture diagrams with the right level of detail, we can kick this off by reading it from the non-functional requirements perspective, making the first holistic review. We will do this review using an initial segregation of the system.

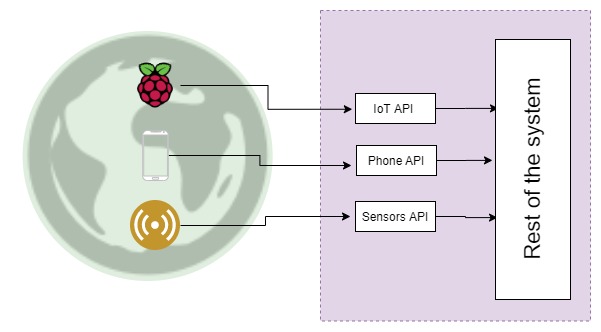

The sensor APIs

It is obvious that the sensor APIs represent a set of microservices, exposed to the open internet, with potentially large amounts of traffic flowing only in. The following is a conceptual diagram, visually enhanced for discussion purposes; it is not the way I recommend you draw your diagrams, but rather a way to think about this particular one.

In the picture - Sensor arrays, IoT devices, and mobile devices around the world will be continuously sending data to our system

Ingress. In this case, we observe that any outward-facing system (it will mostly be the APIs) will need to be exposed through some kind of ingress layer, or layers (a Layer 7 load balancer plus the Kubernetes ingress, for example).

Even before a detailed analysis of the architecture diagram, we can predict that these components need elasticity to accommodate the amount of traffic they will receive, especially because the traffic comes from the outside organically, which is impossible to control and sometimes hard to predict.

Elasticity. In cloud computing, elasticity is one of the first big concepts introduced (it is also where the “elastic” part in the names of AWS components comes from). It is the capability of the infrastructure or a platform (as a service, in this case) to adapt to a certain workload by tweaking resource utilization. So our architecture now has the capability to manage its resource reservation in quite a flexible way, which changed the game.
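To make the idea of adapting to a workload concrete, here is a minimal sketch in Python of a proportional scaling rule. The Kubernetes Horizontal Pod Autoscaler documents a rule of this shape (desired replicas equal the current replicas multiplied by the ratio of the current metric to the target metric, rounded up); everything else here, including the function name, the bounds, and the example numbers, is an assumption for illustration. The real autoscaler also applies tolerances and stabilization that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling: grow or shrink replicas with the load ratio."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a traffic spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical numbers: 4 replicas, target of 60 requests/s per replica.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
print(desired_replicas(4, current_metric=15, target_metric=60))   # 1
print(desired_replicas(4, current_metric=600, target_metric=60))  # 10 (capped)
```

The upper bound matters for outward-facing components in particular: organic traffic is hard to predict, so the cap is what keeps cost and downstream load under control.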

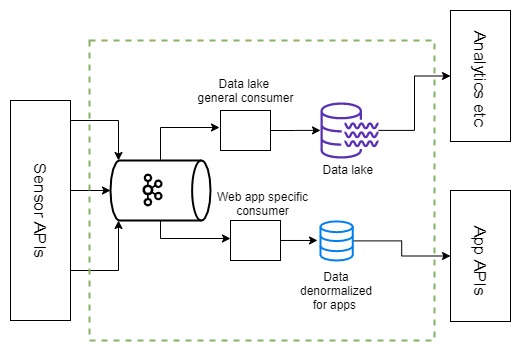

Data aggregation and processing

Looking at the spec, the data aggregation and processing part of the system does not need any outside connection, which will have implications for the networking setup.

In the picture - Based on the specification of the system, the architect decided to go with an event-based queue/storage system in the form of Kafka (which is the only strictly decided technology btw) and have the API microservices constantly publish to Kafka, while we will develop a set of consumers to deal with preparing the data for different needs.

On the other side, depending on the technology of choice and sensor API architecture choices, we expect two things:

- That this part of the system would contain a database or some other persistence layer capable of accepting a large amount of data and facilitating its further processing.

- That this part of the system creates many internal connections.

Denormalization is the method of changing the structure of the data to accommodate some specific purpose, most often better read performance.

In our case, we have an event-based datastore holding the data in the form of events. This form allows for fast writes and makes it easy to see how the data looked over time, because every event on top of some state (in this example, the state of one sensor) is saved as a discrete step whenever a change happens. Denormalization in our case means reading from the event stream and decomposing the data into the shape that is relevant for front-end applications. Since we need performant reads here, we would go for an SQL or NoSQL database and write to it in the desired form, merging all the events together to form this new data structure.
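The merging of events into a read-friendly structure can be sketched in a few lines of Python. This is an illustration only: the event fields (`sensor_id`, `ts`, `field`, `value`) and the in-memory dictionary standing in for the read database are assumptions, not part of the specification.

```python
from collections import defaultdict

# Hypothetical event stream: every change to a sensor is a discrete event.
events = [
    {"sensor_id": "s1", "ts": 1, "field": "temperature", "value": 20.5},
    {"sensor_id": "s1", "ts": 2, "field": "humidity", "value": 40},
    {"sensor_id": "s2", "ts": 3, "field": "temperature", "value": 18.0},
    {"sensor_id": "s1", "ts": 4, "field": "temperature", "value": 21.0},
]


def build_read_model(events):
    """Fold events, in timestamp order, into one row per sensor."""
    rows = defaultdict(dict)
    for event in sorted(events, key=lambda e: e["ts"]):
        row = rows[event["sensor_id"]]
        row[event["field"]] = event["value"]  # later events overwrite earlier ones
        row["updated_at"] = event["ts"]
    return dict(rows)


read_model = build_read_model(events)
print(read_model["s1"])
print(read_model["s2"])
```

The event stream keeps the full history, while the read model holds only the latest state per sensor, which is what a front-end query typically needs.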

In terms of scale, parts of the system that are directly connected to processing the data coming in from the sensors would have proportional scaling needs. Other parts of the system that do independent data processing (reporting, daily crunching of data, and so on) would be scaled based only on operational needs, which are dictated by the required speed.

A data lake is a single store that holds as much as possible, or all, of the enterprise data, mostly for the purpose of analytics and reporting. Most of the data in this storage is in its natural (raw) form and is simply dumped there for further processing and analysis.

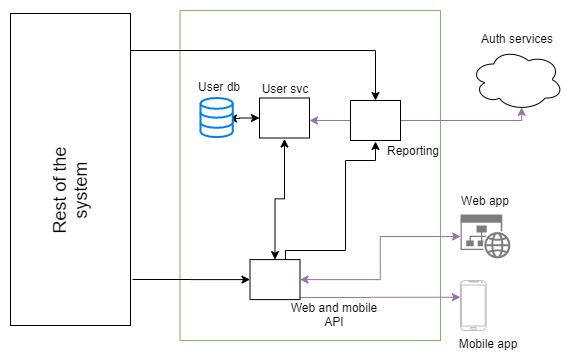

Providing the data

This is another outward-facing layer, this time with a bidirectional flow of data.

Since it provides the data for web and mobile applications, we would also need to prepare for scale and make the system elastic.

In the picture - The outward-facing layer provides the APIs for web and mobile applications. The API microservice is integrated with the data processing part for all the content/app data, with the Reporting microservice for reporting and analytics, and with the _User service_ for user authentication and data.

Apart from having other internal services to integrate with, this system will handle sensitive user data, potentially including personally identifiable information (PII), payment data, and so on. This implies that, apart from the already stated needs, these APIs come with security considerations of their own.

Additionally, the response times of these APIs strongly impact the user experience of the applications they serve. This means that special attention has to be paid to the infrastructure component of API performance.

As a last observation before wrapping up, we have to note that this is a multi-consumer system. A multi-consumer API system implies standardization, consumer identification (per-client authentication and traceability), and consumption control. These are the things that will have to be specially addressed.
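Consumer identification and consumption control can be combined in a per-consumer rate limiter. The sketch below uses a token bucket, which is one common technique and not something the specification prescribes; the class names, consumer ids, and limits are all hypothetical. In a real setup this logic would more likely live in an API gateway or ingress layer than in application code.

```python
import time


class TokenBucket:
    """Allow `rate` requests per second with bursts of up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.updated
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ConsumerLimiter:
    """Keep one bucket per identified consumer (for example, per API key)."""

    def __init__(self, limits, clock=time.monotonic):
        # limits maps a consumer id to a (rate, capacity) pair.
        self.buckets = {cid: TokenBucket(rate, capacity, clock)
                        for cid, (rate, capacity) in limits.items()}

    def allow(self, consumer_id):
        bucket = self.buckets.get(consumer_id)
        # Unknown consumers are rejected outright.
        return bucket is not None and bucket.allow()


# Hypothetical consumers with different requirements.
limiter = ConsumerLimiter({"web-app": (5, 2), "mobile-app": (1, 1)})
print([limiter.allow("web-app") for _ in range(3)])
print(limiter.allow("unknown-app"))
```

Giving each application its own bucket is what lets the system act according to specific app requirements: limits can differ per consumer, and every decision is traceable to a consumer id.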

Summary and next steps

After the first detailed pass through specification and overall architecture, we:

- Made sure that, in general, everything is there and at a consistent level of detail.

- Created a good initial analysis and with it drafted the topics we have to plan around.

We can now get into detailed blueprinting of different aspects of this project. More on that in the next article that will get into the infrastructure for this system.

What more can you expect in the future (not a complete list):

- Infrastructure blueprint.

- Observability blueprint, analysis, and recommendations.

- CI/CD and delivery process blueprint and recommendations.

- Additional SRE-specific discussions and tooling concepts.

- Some topics like security and quality assurance will be embedded into other blueprints depending on the topic.

So, many more technical discussions coming <3